
Importance: High

Error «Redirect Blocked by Robots.txt» Description

Indicates the addresses of pages that return a redirect to a URL blocked by robots.txt. Note that the report lists every URL in the redirect chain that leads to the blocked address.
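The report logic can be sketched in Python with the standard-library `urllib.robotparser`. This is a minimal illustration under stated assumptions, not the tool's actual implementation: the redirect map, the `example.com` URLs, and the helper names `build_parser`, `chain_from`, and `redirects_to_blocked` are hypothetical, and the sketch assumes all URLs share one host, so a single robots.txt applies to the whole chain.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; assumes every URL below is on the same host.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

# Hypothetical crawl data: redirecting URL -> its redirect target.
REDIRECTS = {
    "https://example.com/a": "https://example.com/b",
    "https://example.com/b": "https://example.com/private/c",
    "https://example.com/old": "https://example.com/new",
}


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


def chain_from(url, redirects, max_hops=10):
    """Return the URLs reached by following redirects from url (url itself excluded)."""
    chain = []
    seen = {url}
    while url in redirects and len(chain) < max_hops:
        url = redirects[url]
        chain.append(url)
        if url in seen:  # redirect loop: stop following
            break
        seen.add(url)
    return chain


def redirects_to_blocked(redirects, rp, agent="*"):
    """List every crawlable redirecting URL whose chain reaches a disallowed URL."""
    report = []
    for source in redirects:
        if not rp.can_fetch(agent, source):
            continue  # the source itself is blocked: a different issue
        if any(not rp.can_fetch(agent, hop) for hop in chain_from(source, redirects)):
            report.append(source)
    return report


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for url in redirects_to_blocked(REDIRECTS, rp):
        print(url)
```

Both `/a` and `/b` are reported, because each of them starts a chain that ends at the disallowed `/private/c`; `/old` is not, since its target `/new` is crawlable.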

The Importance of the Problem

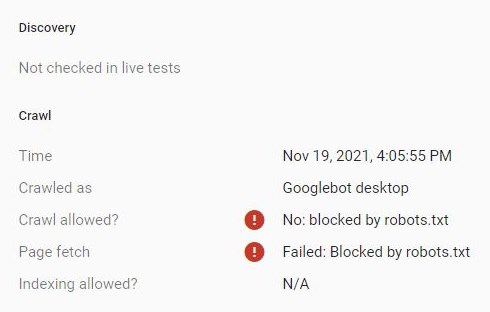

A redirect blocked by robots.txt occurs when the initial URL is allowed for crawling, but the final redirect target or any other URL in the redirect chain is disallowed. In this case, the search robot follows the link but cannot continue crawling, because it hits the blocked address.
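The robot's behaviour can be illustrated with a short Python sketch, assuming a robots.txt-compliant crawler that checks every hop before requesting it. `follow_redirects`, the redirect map, and the `example.com` URLs are hypothetical; `RobotFileParser` comes from Python's standard `urllib.robotparser` module.

```python
from urllib.robotparser import RobotFileParser


def follow_redirects(start, redirects, rp, agent="*", max_hops=10):
    """Follow a redirect chain the way a robots.txt-compliant crawler would.

    Returns (visited, blocked): visited lists the URLs the crawler was allowed
    to request, and blocked is the first disallowed URL, or None if no hop
    in the followed part of the chain was disallowed.
    """
    visited = []
    url = start
    for _ in range(max_hops + 1):
        if not rp.can_fetch(agent, url):
            return visited, url  # the crawler must not request this URL
        visited.append(url)
        if url not in redirects:
            break  # reached a final page that is not a redirect
        url = redirects[url]
    return visited, None


if __name__ == "__main__":
    rp = RobotFileParser()
    rp.parse(["User-agent: *", "Disallow: /private/"])
    redirects = {
        "https://example.com/a": "https://example.com/b",
        "https://example.com/b": "https://example.com/private/c",
    }
    print(follow_redirects("https://example.com/a", redirects, rp))
```

Here the crawler requests `/a` and `/b`, then stops at `/private/c`: the first URL was allowed, but the chain ends at a disallowed address, so the final page is never reached.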

Such redirects have a negative effect on site crawling and waste link weight. As a result, important pages may receive less link weight and lower crawl priority from the search robot, so they will be crawled less frequently, rank lower in search results, and drive less traffic than they could.

How to Fix the Error

Delete internal links to these redirects or replace them with direct links to accessible addresses.
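Deleting or replacing such links can be sketched in Python: each internal link that points at a redirect is mapped to the chain's final URL, but only when every hop is crawlable. The sketch assumes that the final URL of a clean chain is the right replacement, which may not hold on every site; `resolve_chain`, `suggest_link_fixes`, and the sample data are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def resolve_chain(url, redirects, max_hops=10):
    """Return [url, hop1, hop2, ...] following redirects up to max_hops."""
    hops = [url]
    while hops[-1] in redirects and len(hops) <= max_hops:
        hops.append(redirects[hops[-1]])
    return hops


def suggest_link_fixes(links, redirects, rp, agent="*"):
    """Map each internal link that points to a redirect to a suggested fix.

    The value is the chain's final URL when every hop is crawlable and the
    chain ends at a non-redirecting page; otherwise None, meaning the link
    should be deleted or pointed at another accessible page by hand.
    """
    fixes = {}
    for link in links:
        if link not in redirects:
            continue  # not a redirect: keep the link as it is
        hops = resolve_chain(link, redirects)
        final = hops[-1]
        resolved = final not in redirects  # False for loops or overlong chains
        crawlable = all(rp.can_fetch(agent, hop) for hop in hops)
        fixes[link] = final if resolved and crawlable else None
    return fixes


if __name__ == "__main__":
    rp = RobotFileParser()
    rp.parse(["User-agent: *", "Disallow: /private/"])
    redirects = {
        "https://example.com/a": "https://example.com/b",
        "https://example.com/b": "https://example.com/private/c",
        "https://example.com/old": "https://example.com/new",
    }
    links = ["https://example.com/a", "https://example.com/old", "https://example.com/about"]
    print(suggest_link_fixes(links, redirects, rp))
```

In this example the link to `/old` can be rewritten to `/new`, while the link to `/a` gets `None`, because its chain ends at a blocked URL and there is no accessible target to substitute automatically.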

After that, recheck the redirect settings: there may be an issue with the final redirect URL. If the redirect is configured correctly, block the initial redirect URL in robots.txt so that the search robot does not crawl it.
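Blocking the initial redirect URLs could look like the following Python sketch, which turns a list of such URLs into a robots.txt group and checks the result with the standard-library `urllib.robotparser`. `disallow_group` and the `example.com` URLs are hypothetical, and the sketch assumes you merge the generated lines into the site's existing robots.txt by hand; it does not read or edit the live file, and it refuses to emit `Disallow: /`.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def disallow_group(initial_urls, agent="*"):
    """Build a robots.txt group that disallows each initial redirect URL."""
    lines = [f"User-agent: {agent}"]
    for url in initial_urls:
        parts = urlsplit(url)
        rule = parts.path or "/"
        if parts.query:  # robots.txt rules are matched against path plus query
            rule += "?" + parts.query
        if rule == "/":
            continue  # "Disallow: /" would block the whole site, never emit it
        lines.append(f"Disallow: {rule}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    group = disallow_group(["https://example.com/a", "https://example.com/b?ref=1"])
    print(group)
    rp = RobotFileParser()
    rp.parse(group.splitlines())
    for url in ["https://example.com/a", "https://example.com/about", "https://example.com/new"]:
        print(url, rp.can_fetch("*", url))
```

Robots.txt rules match URL prefixes, so `Disallow: /a` also blocks `/about`, as the check shows. Google and RFC 9309 support a `$` end-of-pattern anchor (`Disallow: /a$`) to match one URL exactly, but `urllib.robotparser` does not evaluate `*` or `$`, so it cannot verify such rules.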
