Plenty of SEO specialists have struggled to get search engines to index certain pages of their websites. However, 90% of these problems are extremely easy to fix. Most of them are caused by pages accidentally blocked in robots.txt, indexing prevented by the meta robots tag, incorrect canonical tags and similar issues.
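
The meta robots and canonical causes can be spotted by parsing a page's HTML. Below is a minimal PHP 8 sketch, assuming you already have the page source as a string and know the URL you expect to be indexed. The function name `findIndexingBlockers()` is hypothetical, and the sketch only checks for `noindex` in `<meta name="robots">` / `<meta name="googlebot">` and for a `<link rel="canonical">` pointing elsewhere (not the X-Robots-Tag HTTP header or robots.txt).

```php
<?php
// Hypothetical helper: lists on-page signals that can keep a URL out of Google's index.
// $html is the page source, $pageUrl is the URL you expect to be indexed.
function findIndexingBlockers(string $html, string $pageUrl): array
{
    if (trim($html) === '') {
        return ['empty HTML'];
    }
    $problems = [];
    $doc = new DOMDocument();
    $previous = libxml_use_internal_errors(true); // real-world HTML is rarely valid, so silence parser warnings
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    foreach ($doc->getElementsByTagName('meta') as $meta) {
        $name = strtolower(trim($meta->getAttribute('name')));
        $content = strtolower($meta->getAttribute('content'));
        // "robots" applies to all crawlers, "googlebot" only to Google's crawler
        if (($name === 'robots' || $name === 'googlebot') && str_contains($content, 'noindex')) {
            $problems[] = "meta $name contains noindex";
        }
    }

    foreach ($doc->getElementsByTagName('link') as $link) {
        $rels = preg_split('/\s+/', strtolower(trim($link->getAttribute('rel'))));
        $href = $link->getAttribute('href');
        // Exact string comparison: any other canonical URL (including a relative
        // or differently formatted one) is reported for manual review
        if (in_array('canonical', $rels, true) && $href !== $pageUrl) {
            $problems[] = "canonical points to $href";
        }
    }
    return $problems;
}
```

An empty array means neither signal was found; a non-empty one tells you which tag to fix before asking Google to recrawl the page.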

However, finding and eliminating indexing problems is not enough. Once the issues are fixed, you have to get search engines to recrawl your important content as soon as possible and make sure that your website and the specific pages are actually indexed.

In this article, we share the methods we know of for getting your webpages indexed by Google faster.

Ways to Improve Google Search Indexing

1. Google Search Console (GSC)

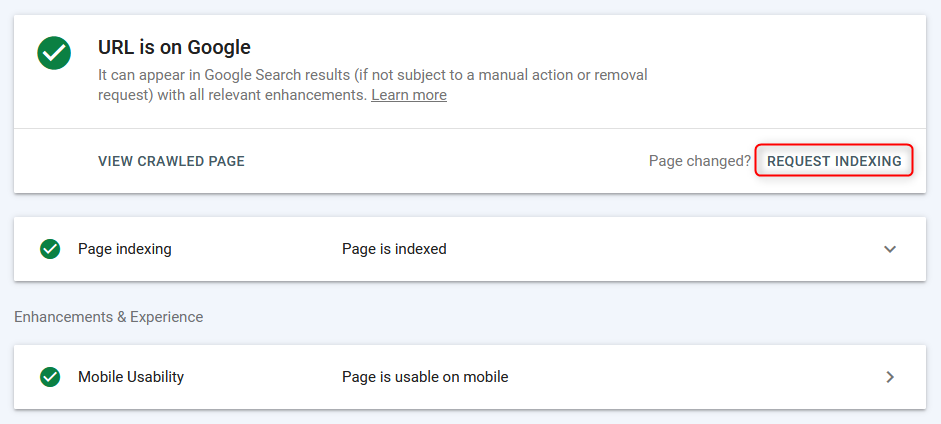

Google Search Console, formerly Google Webmaster Tools, is the most common tool for indexing and reindexing webpages «manually»: you inspect a URL and click «Request Indexing».

Pages often get indexed quickly, sometimes within a few seconds. However, you have to request indexing separately for every single page, and each request takes some time while the search bot fetches the page. There is no way to submit a list of URLs at once and make the search engine recrawl them. As a result, this is an effective but inconvenient method.

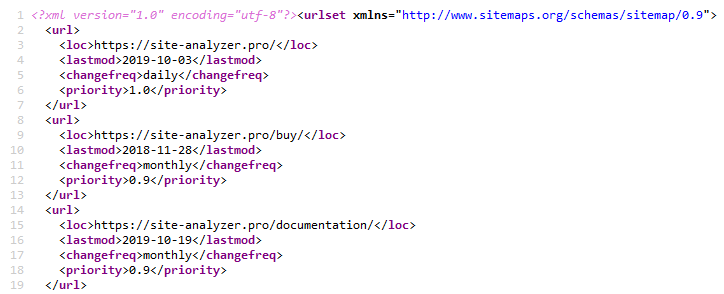

2. Sitemap.xml

Using an XML sitemap is a classic way to speed up the indexing of new webpages. All you need to do is specify the URL of your Sitemap.xml in the Robots.txt file (obviously, you need to create the Sitemap.xml file beforehand).

Usually, this is more than enough for any type of website.

Popular CMSs offer a myriad of sitemap plugins. Additionally, you can create a sitemap with a web crawler or desktop crawling software, such as SiteAnalyzer, which is available free of charge.
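
If you prefer to generate the file yourself, here is a minimal PHP sketch that builds a sitemap.xml in the sitemaps.org format and the matching robots.txt line. The function names and the `mysite.com` URLs are placeholders; the `Sitemap:` directive takes the absolute URL of the file.

```php
<?php
// Hypothetical helper: builds a sitemap.xml document (sitemaps.org protocol 0.9).
// $pages maps an absolute URL to its last modification date (YYYY-MM-DD) or null.
function buildSitemapXml(array $pages): string
{
    $xml = '<?xml version="1.0" encoding="UTF-8"?>' . "\n";
    $xml .= '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">' . "\n";
    foreach ($pages as $url => $lastmod) {
        $xml .= "  <url>\n";
        // URLs must be XML-escaped, e.g. & becomes &amp;
        $xml .= '    <loc>' . htmlspecialchars($url, ENT_XML1 | ENT_QUOTES, 'UTF-8') . "</loc>\n";
        if ($lastmod !== null) {
            $xml .= "    <lastmod>$lastmod</lastmod>\n";
        }
        $xml .= "  </url>\n";
    }
    return $xml . "</urlset>\n";
}

// Hypothetical helper: the robots.txt line that points crawlers to the sitemap.
function sitemapDirective(string $sitemapUrl): string
{
    return "Sitemap: $sitemapUrl\n";
}

// Usage (placeholder URLs and date):
// file_put_contents('sitemap.xml', buildSitemapXml(['https://mysite.com/' => '2024-01-15']));
// file_put_contents('robots.txt', sitemapDirective('https://mysite.com/sitemap.xml'), FILE_APPEND);
```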

3. Pinging Sitemap.xml

Pinging asks the search crawler to recheck your Sitemap.xml file. It is basically an alternative to submitting a sitemap in Google Search Console: you inform search engines about new content, letting Google know that it needs to recrawl the sitemap file. This increases the chances of getting your webpages indexed, but it does not mean that the search bot will do it immediately.

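Ping your sitemaps to Google and Bing using these links (note that in 2023 Google announced the deprecation of its sitemap ping endpoint, so the Google link may no longer work):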

- Google: http://google.com/webmasters/tools/ping?sitemap=https://site-analyzer.pro/sitemap.xml

- Bing: https://www.bing.com/ping?sitemap=https://site-analyzer.pro/sitemap.xml

4. Setting Up a 301 Redirect from a High Traffic Website

This solution allows you to partially transfer link juice and traffic from a high quality site to a website that requires more attention from a search engine or has indexing issues.

I tried putting a redirect from the «News» page of a high traffic website to a similar page of a site that had indexing problems. It only took Google two days to «notice» the redirect, after which the donor site's page disappeared from the search results.

As soon as the page of the acceptor site is indexed, you can remove the redirect.
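
On a PHP-based donor site, such a temporary redirect could look like the sketch below. The path-to-URL map, the constant and the function name are hypothetical; the point is to answer with status 301 and a `Location` header only for the selected page, and to delete the map entry once the target page is indexed.

```php
<?php
// Hypothetical redirect map: donor-site path => page on the site that needs indexing.
const TEMP_REDIRECTS = [
    '/news/' => 'https://mysite.com/news/',
];

// Returns the redirect target for a request path, or null if there is none.
function redirectTarget(string $path, array $map): ?string
{
    return $map[$path] ?? null;
}

// In a web request (e.g. at the top of the donor site's front controller):
if (PHP_SAPI !== 'cli') {
    $path = parse_url($_SERVER['REQUEST_URI'] ?? '/', PHP_URL_PATH) ?: '/';
    $target = redirectTarget($path, TEMP_REDIRECTS);
    if ($target !== null) {
        header('Location: ' . $target, true, 301); // permanent (301) redirect
        exit;
    }
}
```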

5. Remove a Website from Google Search Console and Resubmit It

The main idea is to make the search engine «notice» the new content on your website and recrawl it.

Unfortunately, the experiment did not work. After the website was resubmitted, Google Search Console immediately displayed the old robots.txt file and all the other settings. Apparently, removing a website only hides it from the list, and adding it again simply makes it visible again.

6. Close the Entire Website from Indexing

Let us try to block the entire website for Google in the robots.txt file.
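
Closing a whole site usually takes two lines, `User-agent: *` and `Disallow: /`. To check which paths a robots.txt file actually blocks, here is a simplified PHP 8 sketch with a hypothetical function name. It assumes plain path prefixes only and ignores the `*` and `$` wildcards that Googlebot also supports. Following RFC 9309, a crawler uses the group naming it (falling back to `*`), the longest matching rule wins, and `Allow` wins a tie.

```php
<?php
// Hypothetical, simplified robots.txt checker (plain prefixes only, no * or $ wildcards).
function isAllowed(string $robotsTxt, string $userAgent, string $path): bool
{
    $groups = [];          // each group: ['agents' => [...], 'rules' => [[type, prefix], ...]]
    $current = null;
    $lastWasAgent = false;

    foreach (preg_split('/\R/', $robotsTxt) as $line) {
        $line = trim(preg_replace('/#.*/', '', $line)); // strip comments
        if ($line === '' || !str_contains($line, ':')) {
            continue;
        }
        [$field, $value] = array_map('trim', explode(':', $line, 2));
        $field = strtolower($field);
        if ($field === 'user-agent') {
            if (!$lastWasAgent) { // consecutive User-agent lines share one group
                $groups[] = ['agents' => [], 'rules' => []];
                $current = array_key_last($groups);
            }
            $groups[$current]['agents'][] = strtolower($value);
            $lastWasAgent = true;
        } elseif (($field === 'allow' || $field === 'disallow') && $current !== null) {
            if ($value !== '') { // an empty Disallow blocks nothing
                $groups[$current]['rules'][] = [$field, $value];
            }
            $lastWasAgent = false;
        }
    }

    // Use the groups naming this crawler; fall back to the "*" groups
    $ua = strtolower($userAgent);
    $matching = array_filter($groups, fn(array $g) => in_array($ua, $g['agents'], true));
    if ($matching === []) {
        $matching = array_filter($groups, fn(array $g) => in_array('*', $g['agents'], true));
    }

    $best = null; // [prefix length, isAllow]
    foreach ($matching as $g) {
        foreach ($g['rules'] as [$type, $prefix]) {
            if (str_starts_with($path, $prefix)) {
                $len = strlen($prefix);
                $isAllow = $type === 'allow';
                // Longest match wins; on a tie, Allow wins
                if ($best === null || $len > $best[0] || ($len === $best[0] && $isAllow)) {
                    $best = [$len, $isAllow];
                }
            }
        }
    }
    return $best === null ? true : $best[1];
}
```

For example, `isAllowed("User-agent: *\nDisallow: /\n", 'Googlebot', '/news/')` returns `false`, confirming that the rule closes every page.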

At the time of testing, only one page was indexed: the «first» version of the page, with the www prefix and without a cached copy (the site was young). It took Google about 1 to 2 hours to discover the updated robots.txt. The only previously indexed page disappeared from the index within the next 2 days, leaving the website with 0 pages in Google's search results.

After the site had been completely removed from the Google Search index, the robots.txt file was refreshed for Googlebot.

However, judging by the logs, the bot kept visiting the website once a day. In other words, the Googlebot crawl rate did not change.
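
Daily bot visits like these can be counted straight from the web server access log. A minimal PHP sketch, assuming the common Apache/Nginx «combined» log format; the function name is hypothetical. Matching on the user-agent string alone also counts fake bots that only pretend to be Googlebot, so verify IP addresses if you need exact numbers.

```php
<?php
// Hypothetical helper: counts requests per day whose user agent mentions Googlebot.
// Assumes the "combined" log format:
// IP - - [10/Oct/2023:13:55:36 +0000] "GET /path HTTP/1.1" 200 123 "referer" "user-agent"
function googlebotHitsPerDay(iterable $lines): array
{
    $pattern = '/^\S+ \S+ \S+ \[(\d{2})\/(\w{3})\/(\d{4}):[^\]]+\] "[^"]*" \d{3} \S+ "[^"]*" "([^"]*)"/';
    $hits = [];
    foreach ($lines as $line) {
        if (!preg_match($pattern, $line, $m)) {
            continue; // skip lines in other formats
        }
        if (stripos($m[4], 'Googlebot') === false) {
            continue; // not a (claimed) Googlebot request
        }
        $date = DateTime::createFromFormat('d/M/Y', "$m[1]/$m[2]/$m[3]");
        if ($date === false) {
            continue;
        }
        $day = $date->format('Y-m-d');
        $hits[$day] = ($hits[$day] ?? 0) + 1;
    }
    ksort($hits); // chronological order
    return $hits;
}

// Usage (hypothetical log path):
// print_r(googlebotHitsPerDay(file('/var/log/nginx/access.log', FILE_IGNORE_NEW_LINES)));
```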

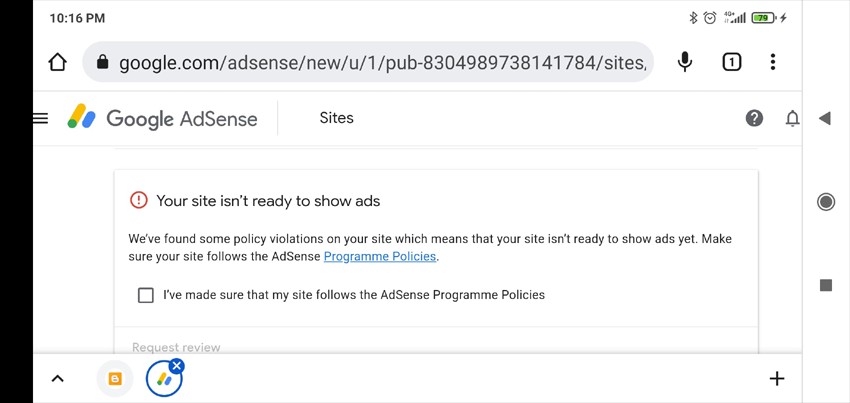

7. Connecting Site to Google AdSense

Usually, if a site fails Google AdSense moderation, you can see the list of possible reasons in your account. The review takes up to two weeks (in my case, it took a week and a half). This information can help you identify what exactly is wrong with your website.

That is what happened in my case. It turned out the website did not have enough unique content or failed to provide a good user experience.

I do not think it has anything to do with indexing issues. However, at least I got a more or less sensible answer, which may be useful next time, for instance, if you want to find out why Google sanctioned your website.

8. Recrawl a Website Using Google Translate

This method is based on the assumption that you can get Googlebot to recrawl your webpages by «driving» them through Google services.

Let us put the site's URL into Google Translate, have it translate the page, cross our fingers and hope for a miracle.

In my case, no miracle happened.

9. Drive Traffic from Social Media & Telegram

You can speed up Google indexing by sharing your content on social media (Twitter, Facebook, LinkedIn, etc.) and posting on Telegram (essentially the same as driving traffic from social media, except that you advertise your website through posts in specific Telegram channels).

At the moment, links from social media generally do not affect ranking (they are typically marked nofollow), so this option is unlikely to be effective on its own. However, it may attract visitors to your site, and as a result, search bots may visit your website more often and index more content.

10. Drive Traffic using Google AdWords

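Create Google AdWords (now Google Ads) campaigns and drive traffic to your website's landing pages. The idea is that Google's bots will start visiting your pages more often, which may improve indexing. Keep in mind, though, that ad landing pages are checked by a separate crawler, AdsBot, rather than by Googlebot.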

11. Conduct a Mobile-Friendly Test in Google Search Console

Use the mobile testing page to make Googlebot recrawl new pages: https://search.google.com/test/mobile-friendly/result?id=28OJNYqIhMMT4grjojk-uw

Once again, the idea is that you can get Google to recrawl your website by running its mobile-friendly test. Nevertheless, I am not sure that this has any tangible effect. (Note that Google retired the Mobile-Friendly Test tool in December 2023.)

12. Drive Traffic from Pinterest

Pinterest is a popular content platform, which makes it appealing not only to visitors but also to search engine bots. Web crawlers, especially Googlebot, browse it systematically. You can simply try creating pins in order to make search bots visit your webpages.

In my case, this did not work.

13. Using Specialized Services for Indexing Pages and Links

As far as I know, this type of solution (the IndexGator service and its alternatives) no longer works. I am not even sure it ever worked in the first place.

According to the server logs, Googlebot did not visit the website after this type of indexing.

14. Creating a Sitemap.txt

The Sitemap.txt file is a simplified version of the Sitemap.xml file. Essentially, it is the same list of a site's URLs, but it lacks additional attributes such as lastmod, priority, changefreq and others.

```text
https://mysite.com/
https://mysite.com/page-1/
https://mysite.com/page-2/
https://mysite.com/page-3/
https://mysite.com/page-4/
https://mysite.com/page-5/
```

It is less common than the usual Sitemap.xml file. Nevertheless, you can add a link to it in robots.txt and the search bots will start scanning it without any issues.
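
A text sitemap takes only a few lines of PHP to generate. This is a sketch with a hypothetical function name; it follows the sitemaps.org rules for text files: one fully qualified URL per line, UTF-8 encoding, and at most 50,000 URLs per file.

```php
<?php
// Hypothetical helper: builds the contents of a sitemap.txt file.
function buildSitemapTxt(array $urls): string
{
    $clean = [];
    foreach ($urls as $url) {
        $url = trim($url);
        // Keep only absolute http(s) URLs without embedded whitespace or line breaks
        if (preg_match('#^https?://[^\s]+$#i', $url)) {
            $clean[$url] = true; // array keys drop duplicates, order is preserved
        }
    }
    if (count($clean) > 50000) {
        throw new LengthException('A sitemap.txt file may list at most 50,000 URLs; split the list.');
    }
    return $clean === [] ? '' : implode("\n", array_keys($clean)) . "\n";
}

// Usage (placeholder URLs), then add "Sitemap: https://mysite.com/sitemap.txt" to robots.txt:
// file_put_contents('sitemap.txt', buildSitemapTxt(['https://mysite.com/', 'https://mysite.com/page-1/']));
```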

15. Internal Re-linking

You can try to speed up your website's indexing by adding links to the new pages from «hub» (high traffic) pages.

This can be done with a script that takes unindexed links from a list and places them on the most visited pages. As soon as those webpages are indexed, the next batch of links is placed, and so on. You can check how many of your webpages are indexed using the Google Search Console API (formerly the Webmaster Tools API) or one of the many web services.
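
The rotation logic of such a script might be sketched as follows. The function names, the batch size and the source of the «already indexed» list (the API or service mentioned above) are assumptions; the sketch only decides which links go onto the hub pages next and renders them as HTML.

```php
<?php
// Hypothetical helper: picks the next batch of not-yet-indexed URLs to link from hub pages.
// $queue: URLs waiting for indexing, in priority order.
// $indexed: URLs already known to be indexed.
function nextLinkBatch(array $queue, array $indexed, int $batchSize = 20): array
{
    if ($batchSize < 1) {
        return [];
    }
    $indexedSet = array_flip($indexed); // constant-time lookups
    $batch = [];
    foreach ($queue as $url) {
        if (!isset($indexedSet[$url])) {
            $batch[] = $url;
            if (count($batch) === $batchSize) {
                break;
            }
        }
    }
    return $batch;
}

// Hypothetical helper: renders the batch as a simple link list for the hub page template.
function renderLinkBlock(array $urls): string
{
    $items = array_map(
        fn(string $u) => '<li><a href="' . htmlspecialchars($u, ENT_QUOTES, 'UTF-8') . '">'
            . htmlspecialchars($u, ENT_QUOTES, 'UTF-8') . '</a></li>',
        $urls
    );
    return "<ul>\n" . implode("\n", $items) . "\n</ul>";
}
```

Running `nextLinkBatch()` on a schedule (for example, daily) automatically moves on to the next URLs as soon as the previous ones show up as indexed.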

16. External Link Building

Google has acknowledged that pages are considered trustworthy if they have backlinks from reputable sites.

If your website has such backlinks, Google is more likely to consider your webpages important and index them.

Thus, you can use external link building to promote specific pages or the website as a whole. This way you will get Google to pay more attention to your site and index new content more often.

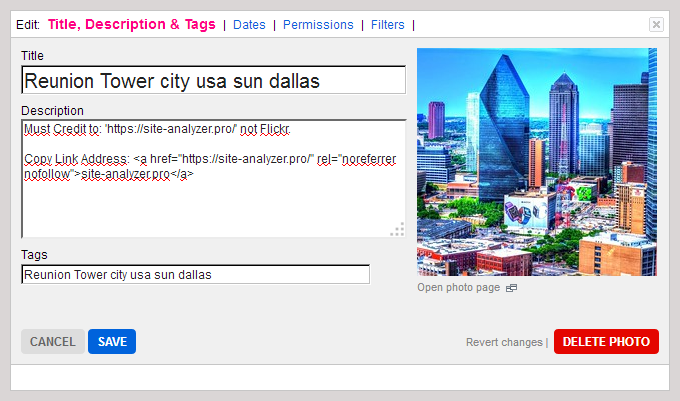

17. Creative Method Using Flickr (by Victor Karpenko)

Create a Flickr account and add a lot of photos: thousands, preferably tens of thousands. It does not matter which photos you use; feel free to take them from other websites, Instagram, etc.

Put a backlink to your website (do not forget to mention its URL) in the description for each photo.

As a result, you can get free traffic and dozens of links every month from trustworthy websites and media.

Note. This strategy is about link building, but it also lets you drive traffic to specific pages of a website and thus improve indexing.

18. Using the Google Indexing API

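Note that Google officially supports the Indexing API only for pages with JobPosting or BroadcastEvent (embedded in a VideoObject) structured data. Here is a short manual for PHP provided by Mahmoud: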

- Register a Google Cloud service account, get an access key (JSON file), add the service account to Google Search Console, and enable the Indexing API in Google Cloud.

- Create a PHP script that will be used to add URLs to the index.

- Download the API client from GitHub: https://github.com/googleapis/google-api-php-client

- Use the following code for batch add requests:

```php
<?php
require_once '/_google-api-php-client/vendor/autoload.php';

// Hypothetical list of URLs to submit; replace it with your own
$links = [
    'https://mysite.com/page-1/',
    'https://mysite.com/page-2/',
];

$client = new \Google_Client();
$client->setAuthConfig('/_google-api-php-client-php70/blablabla-777c77777777.json'); // path to the JSON file received when creating a service account
$client->addScope('https://www.googleapis.com/auth/indexing');
$client->setUseBatch(true); // queue the requests and send them together

$service = new \Google_Service_Indexing($client);
$batch = $service->createBatch();

foreach ($links as $link) { // URLs list
    $postBody = new \Google_Service_Indexing_UrlNotification();
    $postBody->setUrl($link);
    $postBody->setType('URL_UPDATED'); // the page is new or has been updated
    $batch->add($service->urlNotifications->publish($postBody));
}

$results = $batch->execute(); // one entry per URL: a response object or an exception

print_r($results);
```

- $batch->execute() returns an array with one entry per link in the request. If a request failed, its entry is a \Google\Service\Exception, for example with code 429 (Too Many Requests, the quota has been exceeded), 403 (Forbidden, caused by problems with the access key or the API settings) or 400 (Bad Request, caused by an invalid or corrupted request).

- Link to the errors list: https://developers.google.com/search/apis/indexing-api/v3/core-errors?hl=ru#api-errors

- Do not forget to add the service account to Google Search Console as an owner of the property. Otherwise, the script will not work (you will get a 403 error: «Permission denied. Failed to verify the URL ownership»).

Note. It took me around 40 minutes to create this script, including the time wasted on googling errors and fixing the minor mistakes.

19. Blocking Access to Googlebot (Vladimir Vershinin's case)

I noticed that Google was not indexing documents properly in one of our projects. We tried different solutions, but none of them worked.

I had a hunch that search bots could not retrieve anything from our resources because they were being cut off by the DDoS protection service.

On the 26th, we added Googlebot's IP ranges (/ipranges/) to the whitelist of our DDoS protection service. On the 27th, we already had +50k documents in the index. It has been 15 days since then, and Google has indexed +300k documents.

Most of the documents had been reported in GSC as crawled but not yet indexed.

List of Googlebot IP addresses: https://developers.google.com/search/apis/ipranges/googlebot.json
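
If your firewall or DDoS protection lets you plug in custom logic, an incoming IP address can be checked against this list. A minimal PHP sketch, assuming you have already downloaded googlebot.json (its `prefixes` entries contain either `ipv4Prefix` or `ipv6Prefix`); the function names are hypothetical, and the prefixes in any test data should be treated as illustrative, so always load the live file.

```php
<?php
// Checks whether $ip falls inside the CIDR block $cidr (works for IPv4 and IPv6).
function ipInCidr(string $ip, string $cidr): bool
{
    $parts = explode('/', $cidr, 2);
    if (count($parts) !== 2) {
        return false;
    }
    $ipBin = @inet_pton($ip);          // binary form: 4 bytes for IPv4, 16 for IPv6
    $netBin = @inet_pton($parts[0]);
    if ($ipBin === false || $netBin === false || strlen($ipBin) !== strlen($netBin)) {
        return false;                  // invalid input or IPv4/IPv6 mismatch
    }
    $bits = (int) $parts[1];
    $fullBytes = intdiv($bits, 8);
    $restBits = $bits % 8;
    if (strncmp($ipBin, $netBin, $fullBytes) !== 0) {
        return false;                  // the whole bytes of the prefix differ
    }
    if ($restBits === 0) {
        return true;
    }
    $mask = (0xFF << (8 - $restBits)) & 0xFF; // compare the remaining high bits
    return (ord($ipBin[$fullBytes]) & $mask) === (ord($netBin[$fullBytes]) & $mask);
}

// Checks an IP against the "prefixes" list of googlebot.json.
function isGooglebotIp(string $ip, string $googlebotJson): bool
{
    $data = json_decode($googlebotJson, true);
    foreach ($data['prefixes'] ?? [] as $entry) {
        $cidr = $entry['ipv4Prefix'] ?? $entry['ipv6Prefix'] ?? null;
        if ($cidr !== null && ipInCidr($ip, $cidr)) {
            return true;
        }
    }
    return false;
}

// Usage:
// $json = file_get_contents('https://developers.google.com/search/apis/ipranges/googlebot.json');
// var_dump(isGooglebotIp($_SERVER['REMOTE_ADDR'], $json));
```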

20. Contact John Mueller

You can try contacting John Mueller on Twitter. Describe your problem, cross your fingers and wait for an answer.

It did not work in my case. No one responded to me and the new pages were not indexed. Quite strange, but what else can I do?

Who knows, maybe you will get lucky!

***

These seem to be all the known options for speeding up the indexing of both new and old webpages. However, if you think that I have missed something, please share your own cases and examples in the comments. I will be happy to add them to the article.

P.S. To make sure we have completely covered the topic, it is worth mentioning a rather new strategy of indexing a bunch of pages at once using IndexNow, an open protocol that lets you notify search engines about a list of up to 10,000 URLs in a single request. At the time of writing, it only supports Yandex and Bing. It is possible that you will be able to use it for Google in the future; however, there is also a chance that it will never happen.
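
Since an IndexNow submission is just a JSON POST request, it is easy to sketch in PHP. The example assumes the protocol as documented on indexnow.org: a key (8 to 128 letters, digits or dashes) that you also host as a text file on your site, a JSON body with `host`, `key`, `keyLocation` and `urlList`, and at most 10,000 URLs per request. The function names and the key value in the usage are placeholders.

```php
<?php
// Hypothetical helper: splits URLs into IndexNow JSON bodies (max 10,000 URLs each).
// Only URLs on $host are kept, because one submission covers a single host.
function buildIndexNowBodies(string $host, string $key, string $keyLocation, array $urls): array
{
    if (!preg_match('/^[A-Za-z0-9-]{8,128}$/', $key)) {
        throw new InvalidArgumentException('IndexNow keys are 8-128 characters: letters, digits and dashes.');
    }
    $sameHost = array_values(array_filter(
        $urls,
        fn(string $u) => parse_url($u, PHP_URL_HOST) === $host
    ));
    $bodies = [];
    foreach (array_chunk($sameHost, 10000) as $chunk) {
        $bodies[] = json_encode(
            ['host' => $host, 'key' => $key, 'keyLocation' => $keyLocation, 'urlList' => $chunk],
            JSON_UNESCAPED_SLASHES | JSON_THROW_ON_ERROR
        );
    }
    return $bodies;
}

// Sends one body and returns the HTTP status line of the response.
function sendIndexNow(string $body, string $endpoint = 'https://api.indexnow.org/indexnow'): string
{
    $context = stream_context_create(['http' => [
        'method' => 'POST',
        'header' => "Content-Type: application/json; charset=utf-8\r\n",
        'content' => $body,
        'ignore_errors' => true, // return the response even for 4xx codes
    ]]);
    file_get_contents($endpoint, false, $context);
    return $http_response_header[0] ?? 'no response';
}

// Usage (placeholder key; https://mysite.com/a1b2c3d4e5f6.txt must contain the key):
// foreach (buildIndexNowBodies('mysite.com', 'a1b2c3d4e5f6', 'https://mysite.com/a1b2c3d4e5f6.txt', $urls) as $body) {
//     echo sendIndexNow($body), PHP_EOL;
// }
```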
