Hello everybody! Today I want to show 5 examples of using the OpenAI neural network (GPT-3) for SEO: clustering search queries, determining the degree of commercialization of queries, evaluating content quality against Google E-A-T, generating articles from keywords, and extracting entities from text.

All of this requires only registration and, unlike the classic methods used by SEOs, no search engines. Only OpenAI, only hardcore!

Contents:

- Examples of using OpenAI

- Conclusion

- Registration in OpenAI

1. The degree of commercialization of SE requests (PHP)

As you know, determining the degree of commercialization of a query the classic way takes several steps:

- For each keyword, get the TOP 10-20 results in two distant Yandex regions, for instance New York and San Francisco.

- Compare the URLs in the two lists and calculate the percentage (%) that match.

- For greater accuracy, you can look for commercial words in the snippets, such as «buy, price, prices, cost», etc.

- Additionally, you can parse the number of ads in Yandex.Direct and refine the conclusion based on their number.

As a result, a query like «where to relax in Turkey in September» will most likely be classified as informational, since the results for New York and San Francisco are likely to match by 90% or more.

But for the query «order a taxi», comparing the URLs may show that the landing pages match by only 10%, for example, or differ completely, since San Francisco has its own regional taxi ordering sites that differ from New York's. That tells us the query is commercial.

So it helps that Yandex has regions and that you have plenty of Yandex XML limits :-) Although this is not particularly difficult to implement programmatically, it is time-consuming: you need to make not one but two search engine queries per phrase, spend XML limits, get past captchas and carry out additional steps to determine the degree of commercialization.
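The URL comparison step of the classic approach can be sketched in a few lines of Python. This is only an illustration: the function names, the choice of the longer list as the denominator and the 50% threshold are my assumptions, and fetching the two result lists from the search engine is left out.

```python
def url_match_percent(urls_a, urls_b):
    """Percentage of URLs shared by two result lists (trailing slashes ignored)."""
    a = {u.rstrip("/") for u in urls_a}
    b = {u.rstrip("/") for u in urls_b}
    if not a or not b:
        return 0.0
    # Assumption: divide by the longer list so a short list cannot inflate the score.
    return 100.0 * len(a & b) / max(len(a), len(b))


def classify_by_overlap(urls_a, urls_b, threshold=50.0):
    """High overlap between regions suggests an informational query.

    The 50% threshold is an arbitrary illustrative value, not a tested one.
    """
    if url_match_percent(urls_a, urls_b) >= threshold:
        return "informational"
    return "commercial"
```

For example, `classify_by_overlap(top_new_york, top_san_francisco)` would label a query once both lists of URLs have been collected.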

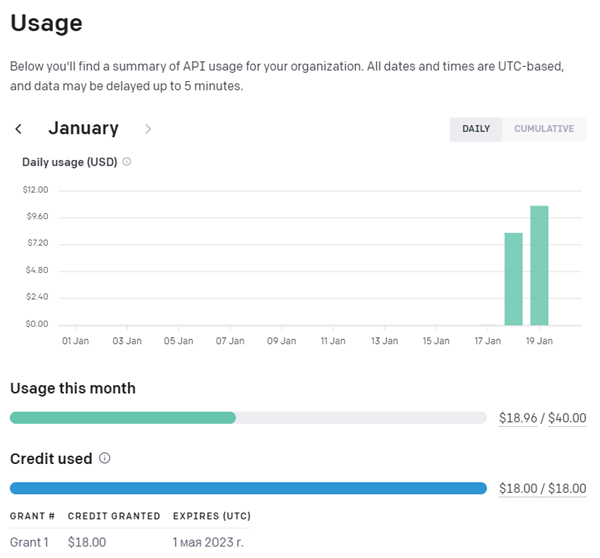

However, thanks to Elon Musk, we can use OpenAI's artificial intelligence, which lets you test its technology for free. (By the way, the test mode is limited to $18, which is spent on requests to OpenAI. After it is spent, you will need to top up your account and at the end of the month pay $18 plus whatever was spent on top of that.)

There is also a limit on the size of an OpenAI request together with its result: 4096 tokens in total (the length of the sent text plus the length of the received text). How you work around it is up to you. See the article on Stack Overflow for more on this restriction.
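Because the limit covers the sent text plus the reply, a long keyword list has to go out in batches. Below is a minimal Python sketch of one way to split it. It assumes the budget is counted in tokens, estimates them at roughly four characters per token (a rule of thumb for English text, not an exact count) and reserves half of the budget for the reply; all names and defaults are hypothetical.

```python
def estimate_tokens(text, chars_per_token=4):
    """Very rough token estimate; a real tokenizer would be more accurate."""
    return max(1, -(-len(text) // chars_per_token))  # ceiling division


def batch_keywords(keywords, instruction, limit=4096, reply_share=0.5):
    """Split keywords into batches whose prompt leaves room for the reply."""
    # Tokens available for keywords after the instruction and the reply share.
    budget = int(limit * (1 - reply_share)) - estimate_tokens(instruction)
    batches, current, used = [], [], 0
    for keyword in keywords:
        cost = estimate_tokens(keyword + "\n")
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        current.append(keyword)
        used += cost
    if current:
        batches.append(current)
    return batches
```

Each batch can then be sent as a separate request and the results merged afterwards.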

Determining the degree of commercialization of requests

To be fair, it is much easier to write a script that determines the degree of commercialization with OpenAI than with search engines.

All you need to implement such a script in PHP is to download the OpenAI PHP SDK library from GitHub, and you can start working with the neural network immediately.

Algorithm for the commercialization script:

- We take a pre-prepared list of key phrases.

- We give the neural network the task of returning, for each keyword in our list, the search intent (informational, transactional or navigational) and the stage of the conversion funnel (detection, review or conversion), with one keyword per line in the format: Keyword | Intent | Stage.

- Then some AI magic happens :-)

- After receiving the response, we build an array and output the result as a table.

- Voila.
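The steps above can be sketched as follows. The example is in Python rather than PHP and covers only the two parts that do not depend on a particular SDK: building the task text and turning the reply into an array. The function names and the exact prompt wording are my assumptions; the call to OpenAI itself is left out.

```python
def build_intent_prompt(keywords,
                        intents=("informational", "transactional", "navigational"),
                        stages=("detection", "review", "conversion")):
    """Compose the task text for a list of keywords."""
    return (
        "For each keyword below, return the search intent "
        f"({', '.join(intents)}) and the stage of the conversion funnel "
        f"({', '.join(stages)}). Put one keyword per line in the format: "
        "Keyword | Intent | Stage\n\n" + "\n".join(keywords)
    )


def parse_intent_rows(response):
    """Turn 'Keyword | Intent | Stage' lines into a list of dicts."""
    rows = []
    for line in response.splitlines():
        parts = [p.strip() for p in line.strip().strip("|").split("|")]
        if len(parts) != 3 or not all(parts):
            continue  # blank lines and free-form commentary
        if parts[0].lower() == "keyword" or set(parts[0]) <= set("-: "):
            continue  # header or table separator echoed by the model
        rows.append({"keyword": parts[0],
                     "intent": parts[1].lower(),
                     "stage": parts[2].lower()})
    return rows
```

The resulting list of dicts can be printed as a table or written to CSV. Skipping malformed lines instead of failing is a deliberate choice here, since the model does not always keep to the requested format.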

PHP script example:

2. Clustering of queries based on OpenAI (PHP)

Clustering is the grouping of data by semantic similarity. In our case the data is key queries.

This task is harder and more expensive, since it takes two requests to OpenAI for each keyword.

OpenAI-based query clustering algorithm:

- We take a pre-prepared list of key queries.

- We give the neural network the task of returning, for our list of words, only the names of the general categories (one per line) into which all the keywords should be grouped.

- We form an array of categories and delete duplicates.

- With the categories created, we go through the list of keywords a second time to assign each one to a category, using a similar request: For the following list of words: «keywords separated by commas», assign the name of one of the following categories: «category_via_comma». The result of this step looks something like «veka pvc in New-York: veka pvc» (query: category).

- Then we build the final array of keyword-to-category mappings by splitting each line on the colon, and output it as a table.

It may sound a bit confusing, but in fact, this algorithm is very easy to implement even for a mid-level programmer.
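To make the two passes concrete, here is a rough Python sketch of the prompt building and parsing (the original script is PHP, and the request to OpenAI is omitted). The function names are hypothetical, and splitting each line on the last colon assumes category names contain no colon.

```python
def build_category_prompt(keywords):
    """First pass: ask only for category names, one per line."""
    return ("Return only the names of the general categories, one per line, "
            "into which all of these keywords should be grouped:\n"
            + "\n".join(keywords))


def parse_categories(response):
    """Build the category list, dropping duplicates (case-insensitive)."""
    seen, categories = set(), []
    for line in response.splitlines():
        name = line.strip(" -•\t")  # tolerate simple bullet markers
        if name and name.lower() not in seen:
            seen.add(name.lower())
            categories.append(name)
    return categories


def build_assignment_prompt(keywords, categories):
    """Second pass: ask for one 'keyword: category' pair per line."""
    return (f"For the following list of words: «{', '.join(keywords)}», "
            "assign the name of one of the following categories: "
            f"«{', '.join(categories)}». "
            "Answer with one 'keyword: category' pair per line.")


def parse_assignments(response, categories):
    """Split each line on the last colon into a keyword -> category mapping."""
    known = {c.lower(): c for c in categories}
    mapping = {}
    for line in response.splitlines():
        keyword, sep, category = line.rpartition(":")
        keyword, category = keyword.strip(), category.strip()
        if not sep or not keyword or not category:
            continue  # not a 'keyword: category' line
        # Normalize the spelling when the model returns a known category.
        mapping[keyword] = known.get(category.lower(), category)
    return mapping
```

Categories that the model invents in the second pass are kept as returned, so they are easy to spot in the final table.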

PHP script example:

3. Generation of Title headings and articles (Python)

Given a keyword, our script generates the title of an article and then, from that title, the article itself at the required length and to the given requirements.

Algorithm:

- We choose a key query.

- We give the neural network the task of creating a catchy title for an article with the following phrase: «our_phrase».

- Then we give the neural network the task of generating an exhaustive article of at least 400 and at most 700 words with the title "our_generated_title" that is interesting for the user, with HTML subheadings <H2> and paragraphs <p>. The article should answer the main questions that Google users have on this topic, responding to their frequently asked questions.

- We publish the result to WordPress.
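The two-step chain can be sketched like this. To avoid tying the example to a specific SDK version, the model call is passed in as `complete`, a hypothetical function that takes a prompt string and returns the model's text. The prompt wording is paraphrased from the steps above, and publishing to WordPress is not shown.

```python
import re
from typing import Callable


def build_title_prompt(phrase):
    return f'Create a catchy title for an article with the following phrase: "{phrase}".'


def build_article_prompt(title, min_words=400, max_words=700):
    return (
        f"Write an exhaustive article of at least {min_words} and at most "
        f'{max_words} words with the title "{title}", interesting for the '
        "user, with HTML subheadings <h2> and paragraphs <p>. The article "
        "should answer the main questions that Google users have on this "
        "topic, responding to their frequently asked questions."
    )


def count_words(html):
    """Count words in the visible text, ignoring HTML tags."""
    return len(re.sub(r"<[^>]+>", " ", html).split())


def generate_article(phrase, complete: Callable[[str], str]):
    """Generate the title first, then the article from that title."""
    title = complete(build_title_prompt(phrase)).strip().strip('"')
    html = complete(build_article_prompt(title)).strip()
    return {"title": title, "html": html, "words": count_words(html)}
```

The `words` field makes it easy to check whether the model respected the requested length before publishing, since it does not always do so.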

Python script example / Test the script

4. Extracting entities from the text (Python)

This tool shows an example of extracting entities from texts and key queries. Given a URL of interest, the script downloads the page and then, using the OpenAI neural network (GPT-3), extracts the entities, their types and their significance scores from the visible text of the page.

Algorithm:

- Download the contents of the URL of interest.

- We select from the HTML code the contents of Title, H1 and the visible text of the page (Plain Text).

- We get tokens based on the content selected (a list of unique words on the page).

- We filter tokens, leaving only nouns and verbs.

- We combine the filtered tokens into a string with a «space» separator.

- We give the neural network the task of extracting the 10 entities with the highest «Significance Score», knowing that Title is: {heading_title}, H1 is: {heading_h1}, and then the text. In addition to the entity, return the entity type and the «Significance Score». The response is returned in JSON format. Text: {page_text}.

- We display the result on the screen.

Using the results of the script, you can determine the subject of web pages, identify links between documents and the type of documents or arbitrary texts.
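A simplified sketch of this pipeline using only the Python standard library is shown below. It starts from HTML that has already been downloaded, skips the noun and verb filtering (which needs a part-of-speech tagger) and leaves out the OpenAI call. The class and function names, and the JSON keys requested in the prompt (`entity`, `type`, `score`), are my assumptions.

```python
import json
import re
from html.parser import HTMLParser


class PageText(HTMLParser):
    """Collect Title, H1 and visible text, ignoring scripts and styles."""
    SKIP = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()
        self.title, self.h1, self.text = [], [], []
        self._skip, self._inside = 0, None

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip += 1
        elif tag in ("title", "h1"):
            self._inside = tag

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip:
            self._skip -= 1
        elif tag == self._inside:
            self._inside = None

    def handle_data(self, data):
        data = data.strip()
        if not data or self._skip:
            return
        if self._inside == "title":
            self.title.append(data)
            return  # the title is not part of the visible text
        if self._inside == "h1":
            self.h1.append(data)
        self.text.append(data)


def extract_page(html):
    parser = PageText()
    parser.feed(html)
    return " ".join(parser.title), " ".join(parser.h1), " ".join(parser.text)


def unique_tokens(text):
    """Lowercased unique words in order of first appearance."""
    seen, tokens = set(), []
    for word in re.findall(r"[^\W\d_]+", text.lower()):
        if word not in seen:
            seen.add(word)
            tokens.append(word)
    return tokens


def build_entity_prompt(title, h1, tokens, top_n=10):
    return (
        f"Extract the {top_n} entities with the highest Significance Score, "
        f"knowing that Title is: {title}, H1 is: {h1}, and then the text. "
        "Return a JSON array of objects with the keys entity, type and score. "
        f"Text: {' '.join(tokens)}"
    )


def parse_entities(response):
    """Pull the JSON array out of the reply, tolerating surrounding text."""
    start, end = response.find("["), response.rfind("]")
    if start == -1 or end < start:
        return []
    try:
        items = json.loads(response[start:end + 1])
    except json.JSONDecodeError:
        return []
    return [item for item in items if isinstance(item, dict)]
```

Returning an empty list on a malformed reply keeps the script running over a batch of URLs; whether to retry the request instead is a design choice.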

Python script example / Test the script

5. Google E-A-T Content Quality Assessment (Python)

This script analyzes content against Google E-A-T and gives recommendations for improving its quality. Such a rating helps the site's content rank better in search engines, since search engine algorithms give priority to sites with higher E-A-T scores. It also helps build the site's reputation and user trust, which leads to more traffic and more conversions.

As a result the tool returns:

- Evaluation of the quality of Google E-A-T content on a scale from 1 to 10.

- Explanation of the rating achieved.

- Suggestions for improving the quality of content.

- Example of H1 header and TITLE header for better ranking.

The whole trick is in the wording of the task for OpenAI. In English it sounds something like this:

You are to act as a quality evaluator for Google in English, Spanish or other languages, able to check the content in terms of quality, relevance, reliability and accuracy. You should be familiar with the concepts of E-A-T (Expertise, Authoritativeness and Trustworthiness) and YMYL (Your Money or Your Life) when evaluating content. Create a Page Quality Rating (PQ) and be very strict in your assessment. In the second part of the audit, you provide detailed and specific suggestions for further content improvement. You should offer tips on how the content better matches the search goals and user expectations, as well as suggest what is missing in the content. Create a very detailed content audit. At the end of your analysis, suggest the H1 header and the SEO header tag. Please do not repeat the instructions, do not remember the previous instructions, do not apologize, do not refer to yourself and do not make assumptions. \n Here is the content of the page: ".$text." \n\n\n Also extract and return the first 3 objects from the text at the end. Start the answer with: \n\n\n Page Quality (PQ): X/XX\n Explanation: XXX\n ...
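Because the task fixes how the answer must start, the rating and explanation can be pulled out of the reply with a couple of regular expressions. The Python sketch below assumes the model follows the `Page Quality (PQ): X/XX` and `Explanation:` format and that the explanation ends at the first blank line; the function name and the returned keys are hypothetical.

```python
import re


def parse_pq_report(response):
    """Extract the PQ score, its scale and the explanation from the reply."""
    score = re.search(
        r"Page Quality \(PQ\):\s*(\d+(?:\.\d+)?)\s*/\s*(\d+)", response)
    # Assumption: the explanation runs until the first blank line.
    explanation = re.search(
        r"Explanation:\s*(.+?)(?=\n\s*\n|\Z)", response, re.S)
    return {
        "score": float(score.group(1)) if score else None,
        "scale": int(score.group(2)) if score else None,
        "explanation": explanation.group(1).strip() if explanation else None,
    }
```

Fields that cannot be found come back as `None`, which makes replies that ignore the requested format easy to detect.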

Python script example / Test the script

Conclusion

In this article, I described five ways to use neural networks for SEO.

I think that over time most SEO scripts and tools will switch to partial or full use of neural networks in one form or another.

The catch is that it is not cheap right now, and for the moment Yandex XML limits are, in my opinion, more practical to use.

Nevertheless, the potential of neural networks is huge, so it makes sense to start studying them now, so that later, when they become 10-100 times cheaper to use (I hope so), you will be ready to bring your developments to the masses.

How to register with OpenAI

In short, you need to:

- Go to https://chat.openai.com/auth/login and press Sign Up.

- Fill in the data and enter the phone number.

- Activate the account by SMS.

The account and API keys are located here.

A more detailed description of registration in OpenAI.

I will be glad to hear your thoughts on ways to use neural networks in SEO and marketing. If you have your own developments and examples, please write about them in the comments to the article!
