Hello everyone! It has been a little over three months since the last SiteAnalyzer update, and we are glad to announce that version 2.8 is now available!

The new version of SiteAnalyzer includes a number of new features: we have added a set of 6 domain analysis modules, implemented cookie usage and management for website parsing, added the ability to automatically export reports of selected projects to Excel, and fixed issues that could occur during program registration. Read more about the latest update below.

Major Changes

1. Domain Analysis

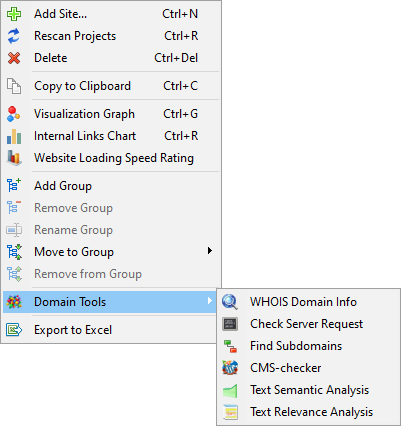

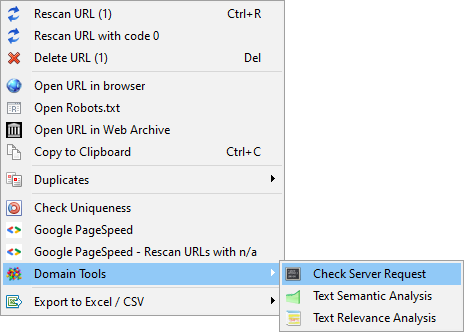

We have added a set of 6 domain analysis modules to the new version of SiteAnalyzer. They let you analyze websites without relying on third-party online services. The domain analysis modules can be accessed through the context menu of the project list.

The list of modules:

- WHOIS Domain Info

- Check Server Request

- Searching for subdomains

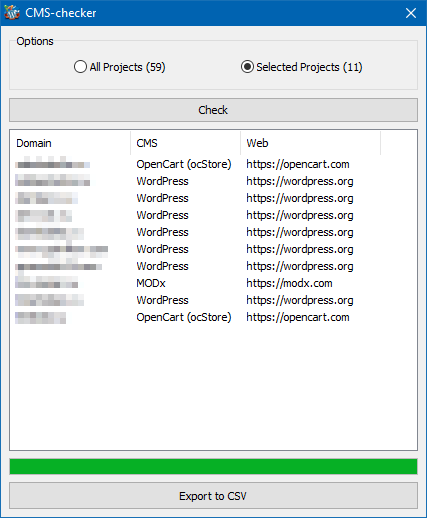

- CMS-checker

- Text Semantic Analysis

- Text Relevance Analysis

Most of these modules support batch processing of domains and allow you to export the results to a CSV file or to the clipboard.

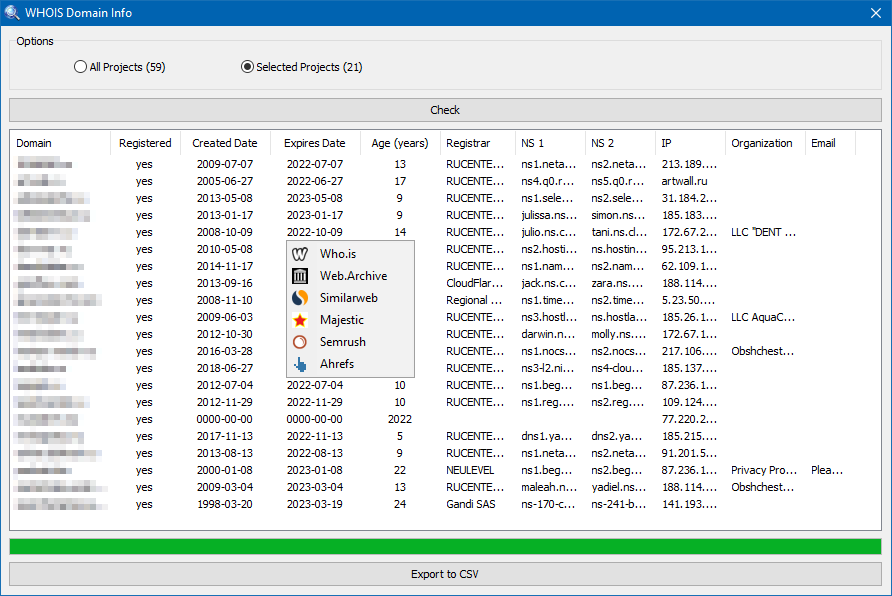

WHOIS Domain Info

This tool is designed for bulk lookup of domain age and other key domain parameters. Our WHOIS checker determines the age of multiple domains in years and displays the WHOIS data: the domain registrar, the creation and registration expiration dates, NS servers, IP addresses, the names of the organizations that own the domains, and contact emails (if specified).

The context menu also gives you even more information on each website: check its traffic on Similarweb, view saved copies of the site on Archive.org, or run a detailed link audit in Majestic, Semrush, or Ahrefs.
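For readers curious how a bulk domain-age check can work, here is a minimal Python sketch. It is not SiteAnalyzer's implementation. It assumes the plain WHOIS protocol (RFC 3912: send the domain name plus CRLF over TCP port 43) and uses `whois.verisign-grs.com`, the registry WHOIS server for .com and .net, as a default. Other zones use other servers, and registries label the creation date differently, so the regex covers only a couple of common ISO-date labels. All function names are hypothetical.

```python
import re
import socket
from datetime import date
from typing import Optional

# Assumed default: the registry WHOIS server for .com/.net domains.
DEFAULT_WHOIS_SERVER = "whois.verisign-grs.com"

# Assumed labels; registries format the creation date differently.
CREATION_RE = re.compile(
    r"^\s*(?:creation date|created)\s*:\s*(\d{4}-\d{2}-\d{2})",
    re.IGNORECASE | re.MULTILINE,
)


def whois_query(domain: str, server: str = DEFAULT_WHOIS_SERVER, timeout: float = 10.0) -> str:
    """Send one WHOIS query (domain + CRLF over TCP port 43) and return the raw reply."""
    with socket.create_connection((server, 43), timeout=timeout) as sock:
        sock.sendall(domain.encode("idna") + b"\r\n")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection when the reply is complete
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_creation_date(whois_text: str) -> Optional[date]:
    """Return the first creation date found in a WHOIS reply, or None if no known label matches."""
    match = CREATION_RE.search(whois_text)
    return date.fromisoformat(match.group(1)) if match else None


def domain_age_years(created: date, today: Optional[date] = None) -> int:
    """Number of full years between the creation date and today."""
    today = today or date.today()
    before_anniversary = (today.month, today.day) < (created.month, created.day)
    return today.year - created.year - int(before_anniversary)
```

A batch check would loop over the domain list and call `domain_age_years(parse_creation_date(whois_query(name)))`, skipping domains for which `parse_creation_date` returns `None`.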

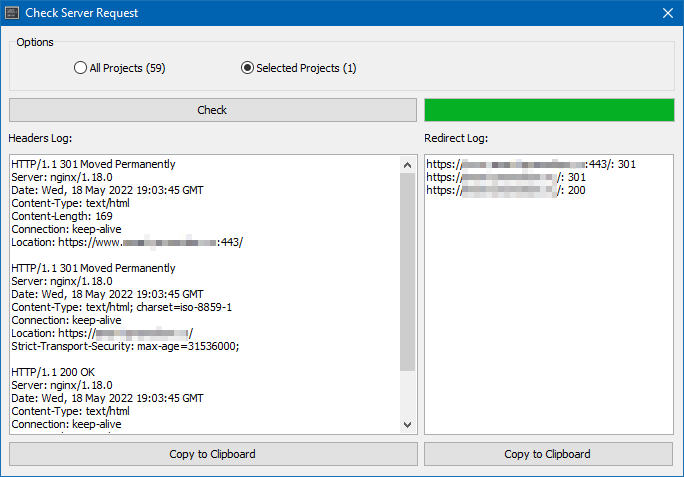

Check Server Request

This module lets you check which response code the server returns when one of its pages is requested. The 200 (OK) HTTP code is the standard response for successful HTTP requests, while non-existent pages return the 404 (Not Found) code. Other common codes include 301 (Moved Permanently), 403 (Forbidden), 500 (Internal Server Error), and 503 (Service Unavailable).

In addition to the response code itself, the module displays all the headers sent by the server (response code, server date and time, server type, content type, redirects, etc.).

You can also check the server response and the full list of redirects in a pop-up window via the context menu of the site's URL list.
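To illustrate what such a check involves, here is a rough Python sketch built on the standard library's `http.client`. It is not SiteAnalyzer's code. It assumes HEAD requests are acceptable; some servers answer HEAD differently from GET, so a real tool may use GET instead. Redirects are followed manually so that every hop can be reported. The function names are hypothetical.

```python
import http.client
from http import HTTPStatus
from typing import Callable
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}


def reason_phrase(status: int) -> str:
    """Standard reason phrase for a status code, e.g. 404 -> 'Not Found'."""
    try:
        return HTTPStatus(status).phrase
    except ValueError:  # non-standard codes such as 520
        return "Unknown status"


def fetch_head(url: str, timeout: float = 10.0) -> tuple[int, dict[str, str]]:
    """Send one HEAD request without following redirects; return status and headers."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)
    target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    try:
        conn.request("HEAD", target)
        resp = conn.getresponse()
        # Header names are lower-cased; repeated headers keep only the last value here.
        return resp.status, {name.lower(): value for name, value in resp.getheaders()}
    finally:
        conn.close()


def redirect_chain(url: str,
                   fetch: Callable[[str], tuple[int, dict[str, str]]] = fetch_head,
                   max_hops: int = 10) -> list[tuple[str, int, str]]:
    """Follow 3xx responses hop by hop, recording (url, status, reason) for each one."""
    chain = []
    for _ in range(max_hops):
        status, headers = fetch(url)
        chain.append((url, status, reason_phrase(status)))
        location = headers.get("location")
        if status not in REDIRECT_CODES or not location:
            break
        url = urljoin(url, location)  # the Location header may be relative
    return chain


def print_report(url: str) -> None:
    """Print the status line and all response headers for a single URL."""
    status, headers = fetch_head(url)
    print(status, reason_phrase(status))
    for name, value in headers.items():
        print(f"{name}: {value}")
```

The `fetch` parameter is injectable, so the redirect logic can be exercised without network access by passing a stub that returns canned `(status, headers)` pairs.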

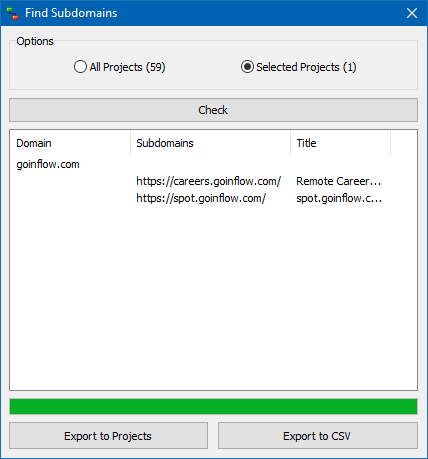

Searching for Subdomains

This tool finds the subdomains of a specific website. Checking subdomains helps you analyze the site you are promoting: the tool identifies all of its subdomains, including those that were "forgotten" for some reason and indexed ones that had been used for testing. The module is also helpful for analyzing the structure of large online stores and portals. The "Find Subdomains" tool relies on specially constructed queries to various search engines, so it finds only those subdomains of the target website that are indexed.

Additionally, you can export the found subdomains to the list of projects.
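The search-engine approach can be sketched in Python. This is our assumption about the general technique, not SiteAnalyzer's actual queries. It uses the widely supported `site:` operator and excludes subdomains already found with `-site:`, so that each new query surfaces different hosts. Fetching the search results is left out, because every search engine has its own API and terms of use. The function names are hypothetical.

```python
from typing import Iterable
from urllib.parse import urlsplit


def build_site_query(domain: str, known: Iterable[str] = ()) -> str:
    """Search query restricted to `domain`, excluding subdomains that were already found."""
    parts = [f"site:{domain}"]
    parts += [f"-site:{sub}" for sub in sorted(set(known))]
    return " ".join(parts)


def extract_subdomains(result_urls: Iterable[str], domain: str) -> list[str]:
    """Collect unique hosts ending in '.<domain>' from search-result URLs."""
    domain = domain.lower().strip(".")
    found = set()
    for url in result_urls:
        # urlsplit().hostname is already lower-cased; it is None for scheme-less strings.
        host = (urlsplit(url).hostname or "").rstrip(".")
        if host.endswith("." + domain):  # the bare domain itself is not a subdomain
            found.add(host)
    return sorted(found)
```

In practice the two steps alternate: run the query, extract new subdomains, add them to `known`, and repeat until no new hosts appear.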

CMS-checker

This module automatically determines the CMS of a website or a group of websites. It identifies the type and name of the CMS by searching for characteristic patterns in the source code of the site's pages. As a result, you can determine the CMS of dozens of websites in one click, without manually studying the page source code or the specifics of various content management systems.

If the content management system you use is not in our database, feel free to contact us via the feedback form. We will add your CMS to our list as soon as possible.
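Pattern-based CMS detection can be sketched in a few lines of Python. The fingerprints below are a small illustrative set of well-known markers, such as WordPress's `/wp-content/` paths and `generator` meta tags. They are not SiteAnalyzer's database, and the names are hypothetical.

```python
import re

# Illustrative fingerprints only; a real CMS database is much larger.
CMS_SIGNATURES = {
    "WordPress": [r"/wp-content/", r"/wp-includes/", r"<meta[^>]+generator[^>]+WordPress"],
    "Joomla": [r"<meta[^>]+generator[^>]+Joomla", r"/components/com_"],
    "Drupal": [r"Drupal\.settings", r"/sites/default/files/", r"<meta[^>]+generator[^>]+Drupal"],
    "1C-Bitrix": [r"/bitrix/(?:js|templates|cache)/"],
}

# Compile once, case-insensitively, so many pages can be checked quickly.
COMPILED = {
    cms: [re.compile(p, re.IGNORECASE) for p in patterns]
    for cms, patterns in CMS_SIGNATURES.items()
}


def detect_cms(html: str, min_hits: int = 1) -> list[str]:
    """Return the CMS names for which at least `min_hits` of their patterns match the page source."""
    detected = []
    for cms, patterns in COMPILED.items():
        hits = sum(1 for p in patterns if p.search(html))
        if hits >= min_hits:
            detected.append(cms)
    return detected
```

Raising `min_hits` trades recall for precision. A single `/wp-content/` link, for example, can appear on a non-WordPress site that hotlinks a WordPress asset.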

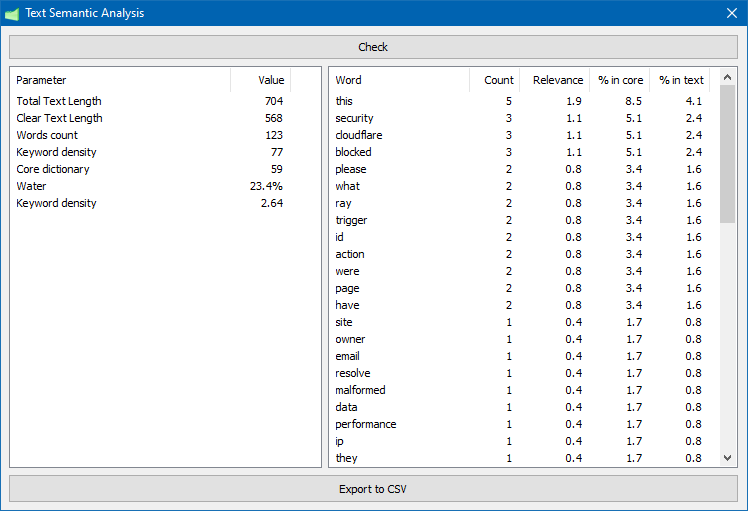

Text Semantic Analysis (keyword density analysis)

This module analyzes the main SEO parameters of a text: its length, word count, keyword density (so-called "nausea"), and readability.

You can run semantic analysis on any page of the website via the context menu of the project's URL list.
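The basic metrics can be illustrated with a short Python sketch. It makes several assumptions. Keyword density is a word's share of all words, in percent. "Classic nausea" follows a convention used by several SEO tools: the square root of the number of occurrences of the most frequent word. Stop words are not filtered, and readability scoring is omitted. SiteAnalyzer's exact formulas may differ, and the names are hypothetical.

```python
import math
import re
from collections import Counter

# Words are runs of letters, optionally joined by an apostrophe or hyphen.
WORD_RE = re.compile(r"[^\W\d_]+(?:['-][^\W\d_]+)*")


def analyze_text(text: str, top_n: int = 5) -> dict:
    """Length, word counts, top keyword densities and 'classic nausea' of a text."""
    words = [w.lower() for w in WORD_RE.findall(text)]
    counts = Counter(words)
    total = len(words)
    # Density of the most frequent words, as a percentage of all words.
    density = {word: round(100 * n / total, 2) for word, n in counts.most_common(top_n)}
    max_count = counts.most_common(1)[0][1] if counts else 0
    return {
        "characters": len(text),
        "characters_no_spaces": len(re.sub(r"\s", "", text)),
        "words": total,
        "unique_words": len(counts),
        "density_percent": density,
        "classic_nausea": round(math.sqrt(max_count), 2),
    }
```

For "Buy red shoes. Red shoes on sale, red!", the word "red" appears 3 times out of 8 words, so its density is 37.5% and the classic nausea is √3 ≈ 1.73.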

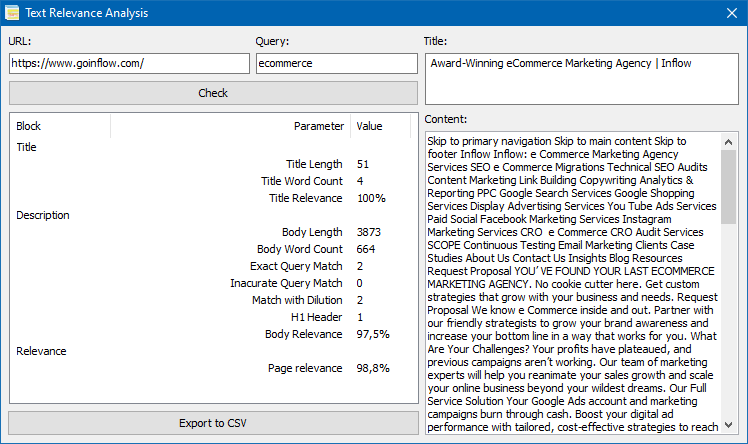

Text Relevance Analysis (on-demand SEO analysis)

This tool is designed specifically for SEO specialists. It lets you run a detailed content analysis of a specific page to assess its relevance to particular search queries.

The main criteria used to decide whether a page is "ready" for promotion are: the presence of an H1 tag, the number of keywords in the TITLE and BODY, the number of characters on the page, the number of exact query occurrences in the text, etc.

Following these rules, you can improve the site's ranking: the page becomes not only better optimized for search engines but also more useful to visitors, which leads to a better user experience and a higher conversion rate (more orders, more targeted calls).

With this tool, you can adjust the number of keyword occurrences on the page and edit its content and meta tags to find the optimal keyword density, which helps improve your search engine rankings.
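A simplified Python sketch of some of these checks, using only the standard library's `html.parser`. It computes H1 presence, keyword hits in the TITLE and in visible text outside HEAD, text length, and exact query occurrences. It does not reproduce SiteAnalyzer's scoring or thresholds, it ignores SCRIPT/STYLE content by assumption, and all names are hypothetical.

```python
import re
from html.parser import HTMLParser


class PageParser(HTMLParser):
    """Collects TITLE text, the number of H1 tags and visible text outside HEAD."""

    SKIP = {"script", "style", "noscript"}
    TRACKED = {"head", "title", "h1"}

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.depth = {"head": 0, "title": 0, "h1": 0, "skip": 0}
        self.title_parts, self.body_parts = [], []
        self.h1_count = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.depth["skip"] += 1
        elif tag in self.TRACKED:
            self.depth[tag] += 1
            if tag == "h1":
                self.h1_count += 1

    def handle_endtag(self, tag):
        key = "skip" if tag in self.SKIP else tag
        if self.depth.get(key, 0) > 0:
            self.depth[key] -= 1

    def handle_data(self, data):
        if self.depth["skip"]:
            return  # ignore script/style content
        if self.depth["title"]:
            self.title_parts.append(data)
        elif not self.depth["head"]:
            self.body_parts.append(data)


def normalize(text: str) -> str:
    return " ".join(text.lower().split())


def count_phrase(text: str, phrase: str) -> int:
    """Whole-word, case-insensitive occurrences of `phrase` in `text`."""
    pattern = r"(?<!\w)" + re.escape(normalize(phrase)) + r"(?!\w)"
    return len(re.findall(pattern, normalize(text)))


def relevance_report(html: str, query: str) -> dict:
    parser = PageParser()
    parser.feed(html)
    parser.close()
    title = " ".join(parser.title_parts)
    body = " ".join(parser.body_parts)  # spaces keep words from adjacent tags apart
    keywords = normalize(query).split()
    return {
        "has_h1": parser.h1_count > 0,
        "title_keyword_hits": sum(count_phrase(title, k) for k in keywords),
        "body_keyword_hits": sum(count_phrase(body, k) for k in keywords),
        "body_characters": len(normalize(body)),
        "exact_query_in_body": count_phrase(body, query),
    }
```

Re-running `relevance_report` after each edit of the text or meta tags shows how keyword counts shift, which is the kind of iteration described above.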

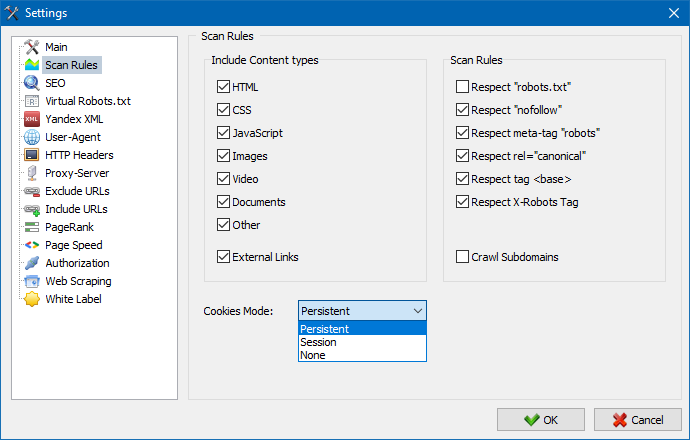

2. Cookie Management

At the request of our users, we have added the ability to use cookies in the program, as well as to export all cookie information to Excel format.

Cookies are small pieces of text data sent by a web server and stored on a user's computer. They usually contain information about the visitor, as well as other service data (such as the date of the visit, the visitor's device, the products they were interested in, their IP address, the session length, and so on). Cookies allow a website to identify each specific user.

Many websites are protected against third-party scripts and programs to prevent parsing. As a result, you need to use cookies when parsing most websites; otherwise, the automatic protection will not let you scrape the site's content.

SiteAnalyzer offers 3 types of cookie management:

- Permanent: select this option if the site cannot be accessed without cookies. It is also recommended when all requests should be counted within the same session; otherwise, each new request will create a new session. This option is enabled by default.

- Sessional: each new request creates a new session.

- Ignore: cookies are never used.

We recommend using the first option, as it is the most universal and allows you to crawl most websites on the internet without any issues.
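The difference between the three modes can be modeled with the standard library's `http.cookiejar` and `urllib.request`. This Python sketch is hypothetical and does not show SiteAnalyzer's internals. Permanent mode shares one cookie jar across the whole crawl. Sessional mode gives every request a fresh, empty jar, so cookies set during that request's redirects are kept only until it finishes. Ignore mode installs no cookie handler at all.

```python
import urllib.request
from enum import Enum
from http.cookiejar import CookieJar
from typing import Optional


class CookieMode(Enum):
    PERMANENT = "permanent"  # one cookie jar shared by every request of the crawl
    SESSIONAL = "sessional"  # a fresh, empty jar for each request
    IGNORE = "ignore"        # no cookie handling at all


class Fetcher:
    def __init__(self, mode: CookieMode = CookieMode.PERMANENT):
        self.mode = mode
        self.jar = CookieJar()  # used only in PERMANENT mode

    def jar_for_request(self) -> Optional[CookieJar]:
        if self.mode is CookieMode.IGNORE:
            return None
        if self.mode is CookieMode.SESSIONAL:
            return CookieJar()  # earlier cookies are forgotten, so the server sees a new session
        return self.jar

    def fetch(self, url: str, timeout: float = 10.0) -> tuple[int, bytes]:
        jar = self.jar_for_request()
        handlers = [urllib.request.HTTPCookieProcessor(jar)] if jar is not None else []
        opener = urllib.request.build_opener(*handlers)
        with opener.open(url, timeout=timeout) as response:
            return response.status, response.read()
```

With `Fetcher()` (Permanent is the default here, mirroring the program's default), a cookie set by the first page is sent back on every later request. This is what lets a crawler pass cookie-based bot checks.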

Cookie management options are configured in the main program settings on the "Scanning" tab.

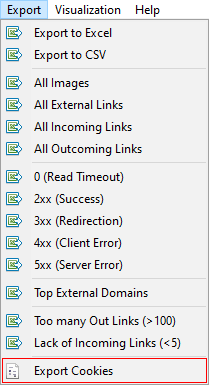

You can also export all cookies of the active website to a text file using the "Export Cookies" button in the program's main menu.
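As a rough illustration of such an export, this Python sketch writes the contents of an `http.cookiejar.CookieJar` to a tab-separated text file. The column layout and function names are assumptions, not SiteAnalyzer's file format. If you need the Netscape `cookies.txt` format instead, the standard library's `http.cookiejar.MozillaCookieJar` provides a `save()` method for it.

```python
import http.cookiejar


def cookie_lines(jar: http.cookiejar.CookieJar) -> list[str]:
    """One tab-separated line per cookie, sorted by domain, path and name."""
    lines = ["domain\tpath\tname\tvalue\texpires\tsecure"]
    for c in sorted(jar, key=lambda c: (c.domain, c.path, c.name)):
        expires = "" if c.expires is None else str(c.expires)  # None means a session cookie
        lines.append("\t".join([c.domain, c.path, c.name, c.value or "", expires, str(c.secure)]))
    return lines


def export_cookies(jar: http.cookiejar.CookieJar, path: str) -> None:
    """Write the cookie list to a UTF-8 text file."""
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(cookie_lines(jar)) + "\n")
```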

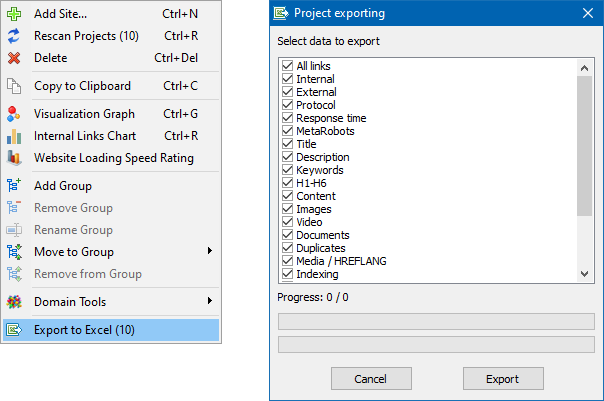

3. Export Selected Projects to Excel

This feature lets you export reports for the selected projects to Excel without exporting each project manually. If more than one project is selected, the export runs automatically once you specify the destination folder; otherwise, only the current project is exported.

For convenience, a separate file with the project name is automatically created for each website.
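The selection rule described above can be modeled in a few lines. This Python sketch is hypothetical. It only plans the output paths (one `.xlsx` file per project, named after the project, in the chosen folder) and leaves the actual Excel writing to a library such as openpyxl. The file-name cleanup and de-duplication rules are our assumptions.

```python
import re
from pathlib import Path


def safe_filename(name: str) -> str:
    """Replace characters that Windows does not allow in file names."""
    cleaned = re.sub(r'[<>:"/\\|?*]+', "_", name).strip(" .")
    return cleaned or "project"


def plan_exports(selected: list[str], current: str, folder: str) -> list[Path]:
    """One report path per project; with a single selection, only the current project is exported."""
    projects = selected if len(selected) > 1 else [current]
    used = set()
    paths = []
    for name in projects:
        stem = candidate = safe_filename(name)
        n = 2
        while candidate.lower() in used:  # avoid overwriting when two names clean up identically
            candidate = f"{stem} ({n})"
            n += 1
        used.add(candidate.lower())
        paths.append(Path(folder) / f"{candidate}.xlsx")
    return paths
```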

4. Entering the Registration Key

We have fixed the issues that could occur during program registration. The registration key is now accepted without any problems.

Other Changes

- Fixed a dashboard bug where the main site quality indicators were summed up when switching between projects.

- Fixed a bug that occurred when exporting a project while a tab with missing data was open; previously, export was unavailable in this case.

- Optimized the Sitemap generation algorithm for images (image entries are now generated according to Google's recommendations).

- Fixed redundant counting of ALT text for favicons (ALT is now ignored for files inside the HEAD tag).

- Fixed a bug where the program sent incorrect headers to the server while scanning a website.

- Optimized Excel export: only the fields visible to the user are now exported instead of all existing fields.

- Fixed a server connection error that occurred when checking for version updates.

- Fixed incorrect parsing of the ALT and TITLE attributes of images.

- Fixed duplicate counting of the H1 and Description tags.

- Added a button to the Info panel that opens the data export menu.

- Added the ability to rescan 3xx redirects.
