Hello everyone! In SiteAnalyzer 2.2, we added several long-awaited features and refined some of the existing tools to make them more convenient. Read more about the update below.

Major Changes

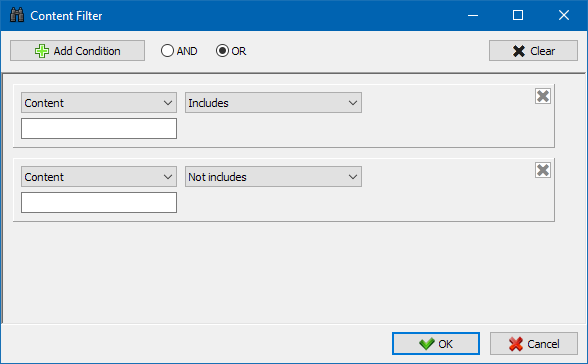

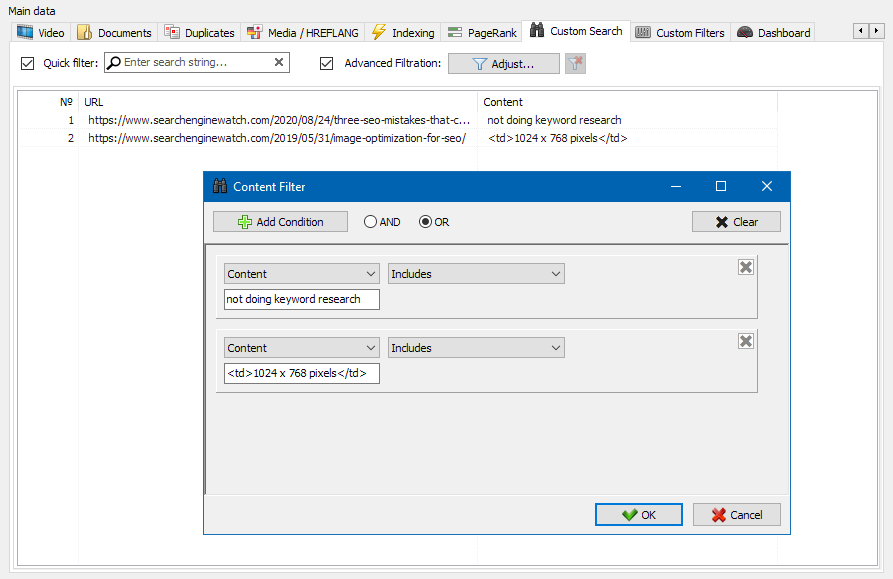

1. We added a custom filters module to search for content on websites during crawling.

By popular demand, we added the long-awaited content search feature. It can be used to search the page source code and display web pages that contain the content you are looking for.

The custom filters module allows searching for micro-markup, meta tags, and web analytics tools, as well as fragments of specific text or HTML code.

The filter configuration window has multiple parameters to search for specific text on a website's pages. You can also use it to exclude certain words or pieces of HTML code from a search (this feature is similar to searching a page's source code with Ctrl+F).

Note. You can try the custom filters module yourself to see how it works. Select the "Does not contain" setting in the drop-down list and then enter your brand name in the text input field. As a result, you will get a list of pages that do not contain your brand name. This helps you find pages whose templates differ from the templates of the main site.
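The "Contains" / "Does not contain" logic described above can be sketched in a few lines of Python. This is only an illustration, not SiteAnalyzer's implementation: the `ContentFilter` class, the `filter_pages` function, and the case-insensitive matching are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ContentFilter:
    """Hypothetical filter: a text fragment plus a contains / does-not-contain mode."""
    text: str
    must_contain: bool = True  # False models the "Does not contain" setting

    def matches(self, html: str) -> bool:
        # Assumption: plain substring search, case-insensitive.
        found = self.text.lower() in html.lower()
        return found == self.must_contain


def filter_pages(pages: dict, content_filter: ContentFilter) -> list:
    """Return the URLs whose source code satisfies the filter.

    `pages` maps URL -> page source code (already downloaded by the crawler).
    """
    return [url for url, html in pages.items() if content_filter.matches(html)]


# Example data (made up): one page mentions the brand, one does not.
pages = {
    "https://example.com/": "<title>Acme Home</title>",
    "https://example.com/old": "<title>Untitled</title>",
}
without_brand = filter_pages(pages, ContentFilter("acme", must_contain=False))
print(without_brand)  # ['https://example.com/old']
```

The same filter with `must_contain=True` returns the pages that do include the fragment, for example a micro-markup attribute or an analytics snippet.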

Go to the Documentation section to learn more about the custom filters module and how it works.

2. We added an internal links chart for websites.

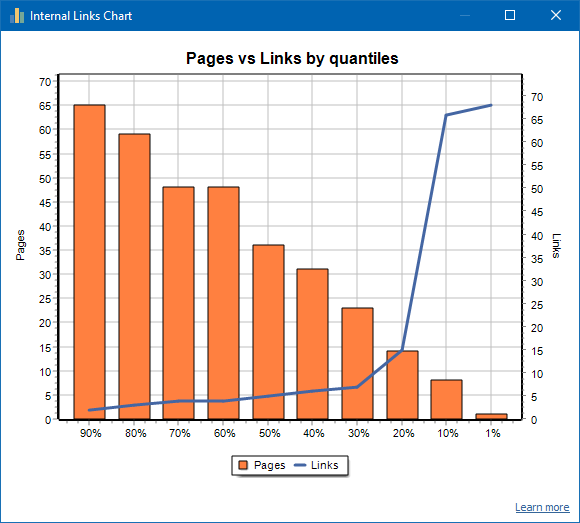

This chart shows the link juice of a website. In other words, it is yet another visualization of internal linking, in addition to the Visualization Graph.

Numbers on the left side represent pages. Numbers on the right side are links. Finally, numbers at the bottom are quantiles for each column. Duplicate links are discarded from the chart (if page A has three internal links to page B, they are counted as one).

The screenshot above shows the following statistics for a 70-page website:

- 1% of pages have ~68 inbound links.

- 10% of pages have ~66 inbound links.

- 20% of pages have ~15 inbound links.

- 30% of pages have ~8 inbound links.

- 40% of pages have ~7 inbound links.

- 50% of pages have ~6 inbound links.

- 60% of pages have ~5 inbound links.

- 70% of pages have ~5 inbound links.

- 80% of pages have ~3 inbound links.

- 90% of pages have ~2 inbound links.

Pages that have fewer than 10 inbound links have a weak internal linking structure. 60% of pages have a satisfactory number of inbound links. Using this information, you can add more internal links to the weak pages if they are important for SEO.

In general practice, pages that have fewer than 10 internal links are crawled by search robots less often. This applies to Googlebot in particular.

Considering that, if only 20-30% of pages on your website have a decent internal linking structure, it makes sense to address this. You will need to optimize the internal linking strategy or find another way to deal with the 70-80% of weak pages (you can disable indexing, use redirects, or delete them).
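To make the numbers behind the chart more concrete, here is a rough Python sketch of how such statistics could be derived from a list of internal links. It is not SiteAnalyzer's actual algorithm: the function names are hypothetical, and the reading of "N% of pages have ~K inbound links" as "the top N% of pages have at least K inbound links" is an assumption.

```python
import math
from collections import defaultdict


def inbound_link_counts(links, pages):
    """Count inbound links per page, treating duplicates as one link.

    `links` is an iterable of (source_url, target_url) pairs.
    """
    sources = defaultdict(set)
    for src, dst in links:
        sources[dst].add(src)  # a set keeps one entry per linking page
    return {page: len(sources.get(page, ())) for page in pages}


def top_percent_thresholds(counts, percents=(1, 10, 20, 30, 40, 50, 60, 70, 80, 90)):
    """For each percentage p, the inbound-link count reached by the top p% of pages."""
    ranked = sorted(counts.values(), reverse=True)
    if not ranked:
        return {}
    result = {}
    for p in percents:
        k = max(1, math.ceil(len(ranked) * p / 100))  # pages in the top p%
        result[p] = ranked[k - 1]
    return result


def weak_pages(counts, min_links=10):
    """Pages with fewer than `min_links` inbound links (10 is the threshold used in the text)."""
    return [page for page, n in counts.items() if n < min_links]


# Tiny made-up site: A links to B three times, C links to B once, B links to A once.
links = [("A", "B"), ("A", "B"), ("A", "B"), ("C", "B"), ("B", "A")]
counts = inbound_link_counts(links, ["A", "B", "C"])
print(counts)  # {'A': 1, 'B': 2, 'C': 0}
```

The three links from A to B collapse into one, which mirrors the deduplication rule described for the chart.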

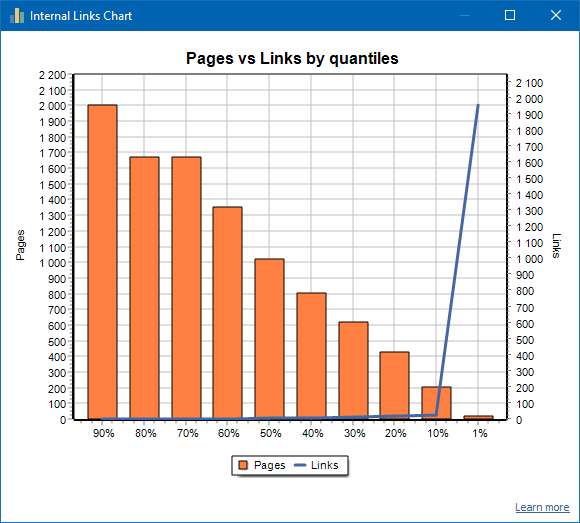

Here is an example of a website with a poor internal linking structure:

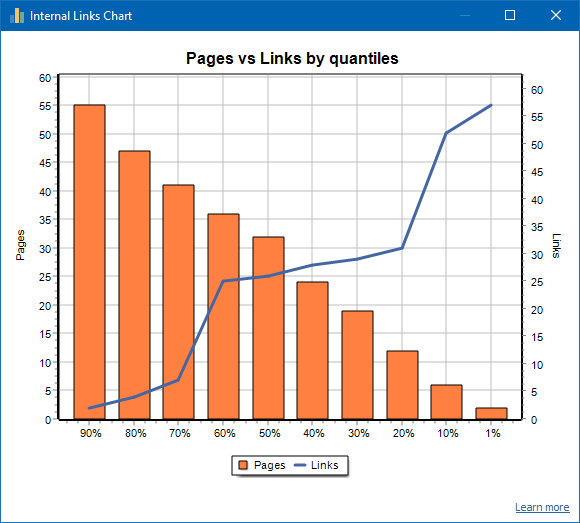

And here is a website with a decent internal linking structure:

Go to the Documentation section to learn more about the internal links chart.

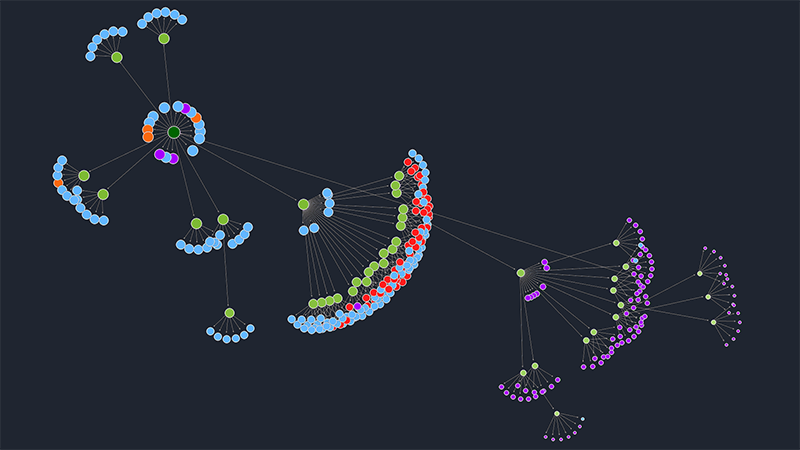

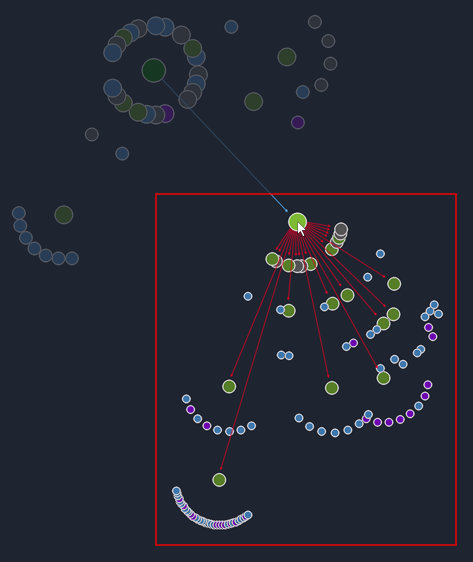

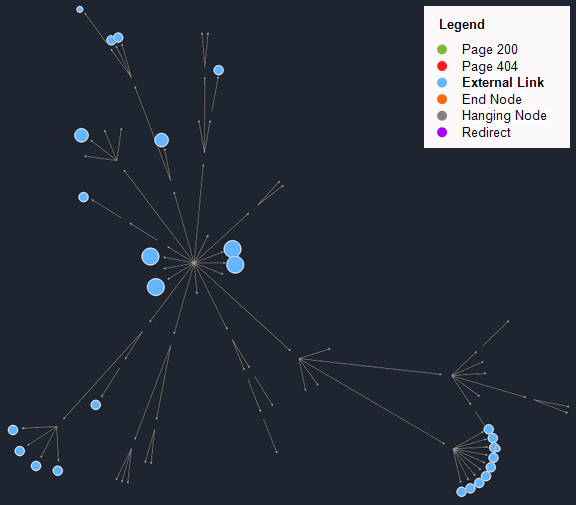

3. We refined the interface of the visualization graph.

- When you drag a node of the graph, its child elements are dragged together with it.

- When you click a node of the graph, its inbound and outbound links are now shown in different colors for clarity.

- The visualization graph legend is now interactive: when you click an element in the legend, the corresponding nodes are highlighted on the graph.

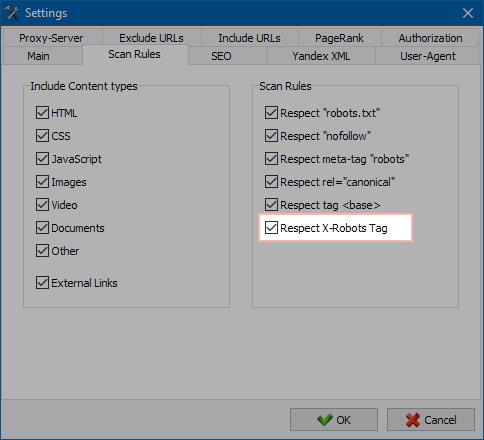

4. We added the X-Robots-Tag option to the crawling settings.

Now you can enable or disable the X-Robots-Tag parameter in the crawling settings. Previously, it was only displayed in the statistics.

Note: X-Robots-Tag is an HTTP response header returned for a URL. It can be used with the same directives as the robots meta tag.
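As an illustration of what such a setting might control, the Python sketch below reads the X-Robots-Tag header from a response and decides whether the page should be treated as indexable. The function names and the `respect_x_robots_tag` flag are hypothetical, and the sketch assumes the setting simply switches the check on or off. It handles only plain comma-separated directives such as `noindex, nofollow`, not user-agent prefixes or dated directives.

```python
def parse_x_robots_tag(header_value: str) -> set:
    """Split a header value like 'noindex, nofollow' into a set of lowercase directives."""
    return {part.strip().lower() for part in header_value.split(",") if part.strip()}


def is_indexable(headers: dict, respect_x_robots_tag: bool = True) -> bool:
    """Return False when X-Robots-Tag forbids indexing and the check is enabled."""
    if not respect_x_robots_tag:
        return True  # setting disabled: the header is ignored
    # Header names are case-insensitive, so compare them in lowercase.
    value = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    directives = parse_x_robots_tag(value)
    # "none" is equivalent to "noindex, nofollow".
    return not ({"noindex", "none"} & directives)


response_headers = {"Content-Type": "text/html", "X-Robots-Tag": "noindex, nofollow"}
print(is_indexable(response_headers))                               # False
print(is_indexable(response_headers, respect_x_robots_tag=False))   # True
```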

Other Changes

- Optimized parsing for H1-H6 headers that utilize classes.

- Fixed a software hang that occurred when crawling large projects.

- Fixed incorrect statistics representation of duplicate meta descriptions.

- Fixed incorrect statistics representation of 404 pages.

- URLs blocked by robots.txt now have the status code "600".

- Response time parameter is calculated more accurately now.

- Fixed incorrect Sitemap.xml generation.

- Redirects are displayed more accurately now.

- Sorting by URLs is more accurate now.
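The "600" status for URLs blocked by robots.txt, mentioned in the list above, is not a real HTTP status code but a marker assigned by the crawler. A minimal Python sketch of that idea, using the standard library's `urllib.robotparser`, might look like this. The `crawl_status` function, its parameters, and the `fetch_status` callback are hypothetical and only illustrate the concept.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_BLOCKED_STATUS = 600  # pseudo status code for URLs disallowed by robots.txt


def crawl_status(url, robots_txt, fetch_status, user_agent="MyCrawler"):
    """Return 600 for a URL disallowed by robots.txt, else the real HTTP status.

    `robots_txt` is the text of the site's robots.txt file.
    `fetch_status` is a caller-supplied function: URL -> HTTP status code.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    if not parser.can_fetch(user_agent, url):
        return ROBOTS_BLOCKED_STATUS  # the URL is never requested
    return fetch_status(url)


robots_txt = "User-agent: *\nDisallow: /private/\n"
fake_fetch = lambda url: 200  # stand-in for a real HTTP request

print(crawl_status("https://example.com/private/page", robots_txt, fake_fetch))  # 600
print(crawl_status("https://example.com/", robots_txt, fake_fetch))              # 200
```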
