Hello everyone! The new version of SiteAnalyzer adds virtual site scanning (the «Virtual Crawl» function) and shows the Robots.txt rules that block pages from indexing. It also adds descriptions of the errors reported by the filters on the SEO Statistics tab. Let's take a closer look at the new version.

Major changes

1. Virtual Crawling

«Virtual Crawl» scans sites without using a database. This removes the overhead of the scanner interacting with the program database, so scanning runs much faster on slow HDD or SSD drives. All data is kept in memory and is available only while the program is running and until you switch to another project.

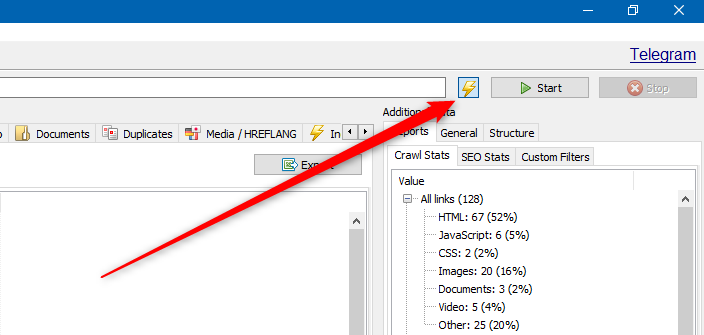

Virtual scanning is turned on with a switch located next to the «Start» and «Stop» buttons on the program's top panel.

- When the switch is off, sites are scanned in classic mode.

- When the switch is on, sites are scanned in virtual scanning mode.

Pros and cons of the «Virtual Crawl» mode:

- The disadvantage of this mode is that project data is available only while the program is running and until you switch to another project.

- The advantage is that projects can be added and analyzed quickly, and scanning is faster on slow HDD or SSD drives.

- Scan results in «Virtual Crawl» mode support the same functionality as regular project scans (filters, sampling, visualization graph, etc.).

Note: the «Virtual Crawl» mode is available only on a paid plan.

2. Robots.txt rules for pages blocked from indexing

At users' request, SiteAnalyzer now displays the Robots.txt rules for pages that are blocked from indexing.

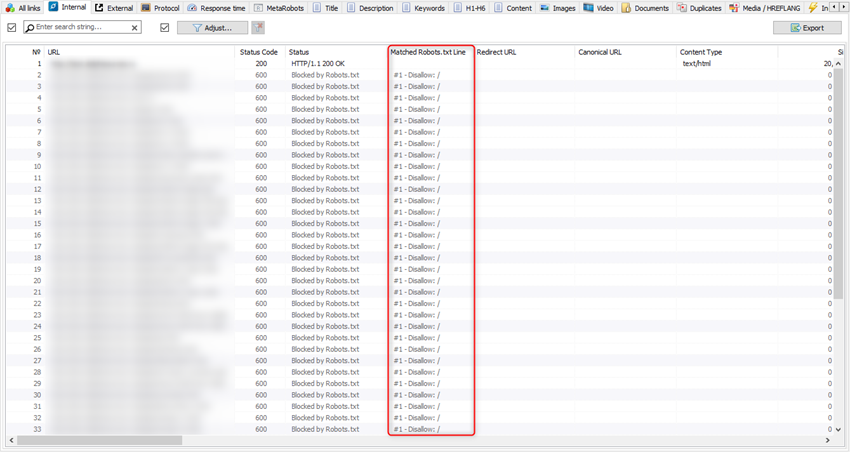

SiteAnalyzer now automatically detects pages that are blocked in the Robots.txt file and displays the rule that blocks their indexing.

This is a convenient, visual report for auditing a Robots.txt file. Site owners often check URLs one by one to see whether Robots.txt blocks them. Instead of these tedious manual checks, you can analyze URLs in bulk to make sure the exclusions in Robots.txt work as intended.

How to check it in SiteAnalyzer:

1. Run SiteAnalyzer.

2. Scan the site you are interested in.

3. After the scan is complete, go to the «Internal > Matched Robots.txt Line» tab.

4. You will see all pages blocked by the Robots.txt file.

5. The «Matched Robots.txt Line» column shows the line number and the rule that blocks crawling of each URL.

This is especially useful for large websites with many complex Robots.txt rules. Testing URLs one at a time works, but it makes it hard to see how a particular rule applies to the whole site. This feature lets you test the Robots.txt file on a site-wide scale.
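To illustrate what a "matched line" means, here is a minimal Python sketch of matching URL paths against Robots.txt rules. It is not SiteAnalyzer's implementation. It assumes the longest-match precedence described in RFC 9309 (the longest matching pattern wins, and Allow wins a tie), supports the `*` and `$` wildcards, and reads only the group for a single user-agent token. The names `parse_rules` and `matched_rule`, and the sample file, are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    line_no: int   # 1-based line number in robots.txt
    kind: str      # "allow" or "disallow"
    pattern: str   # path pattern; may contain * and a trailing $


def parse_rules(robots_txt: str, user_agent: str = "*") -> list[Rule]:
    """Collect Allow/Disallow rules from groups whose User-agent equals user_agent.

    Simplified (assumption): no fallback from a specific agent to "*".
    """
    rules: list[Rule] = []
    in_group = False
    prev_was_agent = False
    for line_no, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            # Consecutive User-agent lines share one group; a new run starts a new group.
            if not prev_was_agent:
                in_group = False
            if value.lower() == user_agent.lower():
                in_group = True
            prev_was_agent = True
            continue
        prev_was_agent = False
        # An empty Disallow value means "allow everything", so it is skipped.
        if in_group and field in ("allow", "disallow") and value:
            rules.append(Rule(line_no, field, value))
    return rules


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern (* = any run of chars, $ = end) to a regex."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def matched_rule(rules: list[Rule], path: str) -> Optional[Rule]:
    """Return the rule that decides `path`: longest pattern wins, Allow wins a tie."""
    best: Optional[Rule] = None
    for rule in rules:
        if not pattern_to_regex(rule.pattern).match(path):  # match() anchors at the start
            continue
        if (best is None
                or len(rule.pattern) > len(best.pattern)
                or (len(rule.pattern) == len(best.pattern) and rule.kind == "allow")):
            best = rule
    return best


ROBOTS = """User-agent: *
Disallow: /admin/
Allow: /admin/public/
Disallow: /*.pdf$
"""

if __name__ == "__main__":
    rules = parse_rules(ROBOTS)
    for path in ["/admin/settings", "/admin/public/page", "/docs/file.pdf", "/blog/"]:
        rule = matched_rule(rules, path)
        if rule and rule.kind == "disallow":
            print(f"{path}: blocked by line {rule.line_no} (Disallow: {rule.pattern})")
        else:
            print(f"{path}: allowed")
```

Running it over a list of crawled paths gives a similar site-wide view: `/admin/settings` is reported as blocked by line 2, while `/admin/public/page` is allowed because the more specific Allow rule on line 3 takes precedence.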

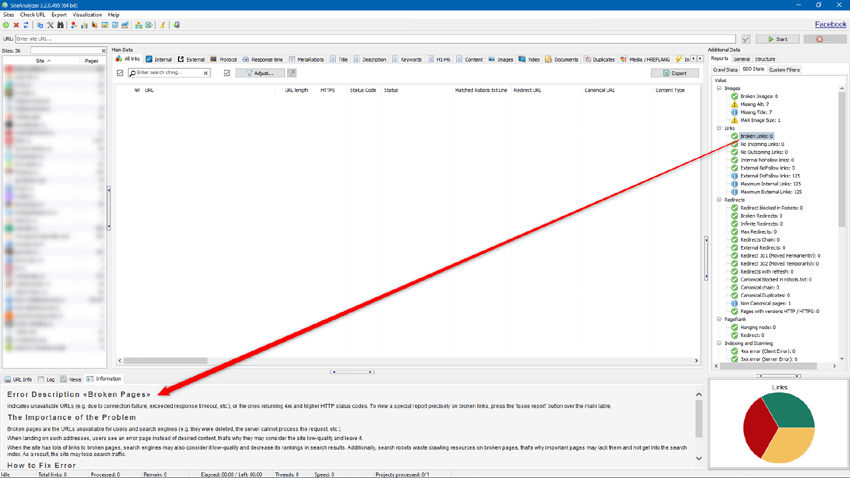

3. Detailed descriptions of SEO filter errors

To make the filters on the SEO Statistics tab easier to understand, the bottom panel (Information tab) now shows a detailed description of the errors for each filter, along with their possible causes and, where applicable, recommendations for fixing them.

Other changes

- Fixed incorrect proxy behavior when starting the program and verifying the license key.

- The maximum value of the «Request Timeout» parameter has been increased to 3600 seconds.

- The virtual Robots.txt is now set individually for each site.

We welcome any comments and suggestions on the program's features and development.

***

Follow us on Facebook! https://www.facebook.com/siteanalyzer.pro/

See you soon!
