Frequently Asked Questions

- Why Is SiteAnalyzer Better Than Its Analogs (Screaming Frog, Sitebulb, Netpeak Spider, etc.)?

- Can SiteAnalyzer Scan 1 Million Pages or More?

- How Many Iterations Are Recommended for PageRank Calculation?

- How to Preserve All Projects When Updating SiteAnalyzer

- What Does "Lack of Resources" in the Program Log Mean?

- Why Can't SiteAnalyzer Scan Sites Built on Tilda?

- Can SiteAnalyzer Scan Websites Built with JavaScript?

- Why Does the Scanner Detect 403 Errors When Pages Load Fine in Browser?

- How Many Threads Are Optimal for Parsing Large Sites?

- The Key Is Already Registered – What to Do?

- The Program Won't Scan the Site – What Should I Do?

- How Can I Scan a Site Protected by Cloudflare?

- Can't Register the Program? Here's What to Do

- How to Limit Site Scanning to 4 Requests Per Second

- SiteAnalyzer System Requirements

- Is SiteAnalyzer Compatible with Linux and macOS?

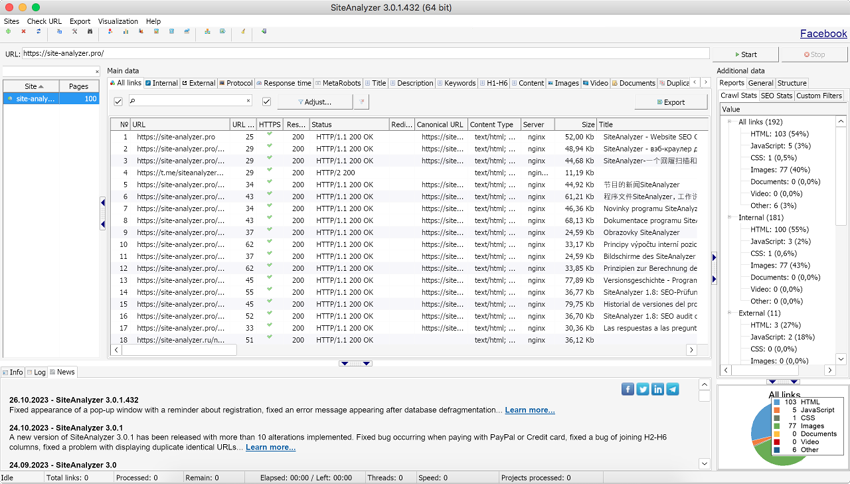

Why Is SiteAnalyzer Better Than Its Analogs (Screaming Frog, Sitebulb, Netpeak Spider, etc.)?

We believe that SiteAnalyzer stands out from the competition in the following key ways:

- Fast and efficient scanning – Our tool delivers high-speed performance that rivals or exceeds leading competitors.

- Convenient project management – Unlike most competing tools that require loading projects one at a time, SiteAnalyzer lets you manage all your projects in a single, organized list.

- Powerful features at a great value – Around 80% of SiteAnalyzer’s functionality matches that of premium paid tools, giving you access to all the essential site analysis features without the high cost.

In the near future, we plan to introduce all the essential tools found in competing products, while adding our own innovations to further improve usability and functionality.

Can SiteAnalyzer Scan 1 Million Pages or More?

Yes, SiteAnalyzer is designed to handle large-scale website crawls.

Below are results from real-world tests conducted on two different systems:

- Windows XP x32 (3 GB RAM):

  - HTML Pages Scanned: 92,000

  - Total Analyzed URIs (pages, images, scripts, documents, etc.): 296,316

  - Elapsed Time: 2 hours 50 minutes

- Windows 10 x64 (8 GB RAM):

  - HTML Pages Scanned: 118,000

  - Total Analyzed URIs (pages, images, scripts, documents, etc.): 2,334,260

  - Elapsed Time: 6 hours 12 minutes

As shown in the results, SiteAnalyzer can process websites of significant size, with performance scaling based on available memory. The more RAM your system has, the larger the number of pages you can scan efficiently.

With enough RAM, scanning more than 1 million pages is achievable.

P.S. Share your scan results (screenshots welcome) and we might feature them in this FAQ!

How Many Iterations Are Recommended for PageRank Calculation?

The accuracy of the PageRank calculation improves with more iterations – the more you use, the smaller the margin of error.

For example, by the 10th iteration, changes in page weight typically reach the level of thousandths or even ten-thousandths – values so small they can usually be ignored without affecting practical results.

Even just 2-3 iterations are often enough to observe meaningful differences in page authority.

Our recommendation:

Use 10 iterations for optimal balance between accuracy and performance.
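To see how the per-iteration change shrinks, here is a minimal sketch of a textbook PageRank power iteration. It is not SiteAnalyzer's internal implementation: the four-page `site` graph, the function name `pagerank_deltas`, and the damping factor of 0.85 are all assumptions chosen for illustration.

```python
def pagerank_deltas(links, iterations=10, damping=0.85):
    """Run a basic PageRank power iteration.

    Returns the final ranks and, for each iteration, the largest
    change in any single page's rank.
    """
    pages = sorted(links)
    n = len(pages)
    ranks = {page: 1.0 / n for page in pages}
    deltas = []
    for _ in range(iterations):
        # Every page starts each round with the "random jump" share.
        new_ranks = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            # A page without outgoing links spreads its weight evenly.
            targets = outgoing if outgoing else pages
            share = damping * ranks[page] / len(targets)
            for target in targets:
                new_ranks[target] += share
        deltas.append(max(abs(new_ranks[p] - ranks[p]) for p in pages))
        ranks = new_ranks
    return ranks, deltas


# Hypothetical four-page site: each key links to the pages in its list.
site = {
    "home": ["catalog", "blog"],
    "catalog": ["home", "product"],
    "product": ["home", "catalog"],
    "blog": ["home"],
}

ranks, deltas = pagerank_deltas(site, iterations=10)
for number, delta in enumerate(deltas, start=1):
    print(f"iteration {number}: largest change = {delta:.5f}")
```

Running it prints the largest rank change per iteration, which makes it easy to judge where additional iterations stop changing the result in any practical sense.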

How to Preserve All Projects When Updating SiteAnalyzer

In most cases, your existing projects and scan results are preserved when updating SiteAnalyzer. However, during major updates that involve changes to the database structure, you may need to rescan certain projects.

To ensure a smooth transition when updating:

1. Run the current version of SiteAnalyzer.

2. Select the projects you want to keep from the project list.

3. Copy them to the clipboard using the context menu.

4. Launch the new version of SiteAnalyzer.

5. Paste the copied URLs into the new project list (click the + icon and choose Paste).

6. Rescan the projects if required due to structural changes.

After these steps, all your key projects are available in the new version.

This method ensures you retain full control over your workflow during updates.

What Does "Lack of Resources" in the Program Log Mean?

During large-scale scans using a high number of threads, you may encounter the following error in the log: "The project scan is stopped due to lack of computer resources. We recommend changing the scan settings".

This message appears when the system runs low on memory or processing power during a scan. To prevent crashes and protect the data already collected, SiteAnalyzer automatically stops the scan.

Why does this happen?

- Scanning very large websites.

- Using too many concurrent threads.

- Limited RAM or running a 32-bit OS.

How to avoid it:

- Increase system RAM.

- Switch to a 64-bit version of Windows.

- Adjust scan settings in the program:

  - Reduce the number of threads.

  - Set a limit on the maximum number of pages to crawl.

These adjustments help maintain stable performance, especially during intensive audits.

Why Can't SiteAnalyzer Scan Sites Built on Tilda?

When scanning Tilda-built sites, you may encounter a 307 Temporary Redirect – caused by Tilda’s built-in DDoS protection. This system blocks automated tools like SiteAnalyzer to prevent abuse.

Workaround:

Try increasing the request delay (e.g., 5-10 seconds) in SiteAnalyzer settings. However, success is not guaranteed, as Tilda’s security measures are designed to block crawlers by default.

Official Statement from Tilda Support: The 307 redirect is triggered by our integrated DDoS protection system. If a site scanner is used, the protection mechanism activates more frequently. In this case, a 'human' verification check was triggered, and the service failed to pass it. We cannot disable this protection system, as it is essential for the security of all sites hosted on our platform. The protection is automatically disabled for known search engine bots.

Can SiteAnalyzer Scan Websites Built with JavaScript?

Q: I’ve added a site for scanning, but the program only detects a few pages – even though the site has a large catalog with over 3,000 pages. What am I doing wrong?

A: It looks like the site is built using JavaScript, and currently, SiteAnalyzer does not support rendering JavaScript-generated content. As a result, it can’t detect or follow links that are dynamically loaded by JavaScript – which leads to incomplete scans.

Support for JavaScript rendering is planned for an upcoming version, so stay tuned for future updates!
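The effect can be reproduced with a small sketch that reads raw HTML the way a non-rendering crawler does. The page content, the `LinkCollector` class, and the `static_links` helper are hypothetical and only illustrate the idea: links created by a script never appear as `<a>` tags in the source, so a parser that does not execute JavaScript cannot find them.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect href values from <a> tags present in the raw HTML."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


# Hypothetical page: one static link, plus a catalog built by a script.
RAW_HTML = """
<html><body>
  <a href="/about">About</a>
  <div id="catalog"></div>
  <script>
    // These links exist only after a browser runs the script.
    for (let i = 1; i <= 3000; i++) {
      const a = document.createElement("a");
      a.href = "/product/" + i;
      document.getElementById("catalog").appendChild(a);
    }
  </script>
</body></html>
"""


def static_links(html):
    """Return the links visible without executing any JavaScript."""
    parser = LinkCollector()
    parser.feed(html)
    return parser.links


print(static_links(RAW_HTML))  # prints ['/about']
```

Only the static link is found; the 3,000 catalog links are invisible because they are never written into the HTML that the server returns.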

Why Does the Scanner Detect 403 Errors When Pages Load Fine in Browser?

Q: During scanning, the program detects many 403 errors, but when I visit those pages in a browser, they load fine with a 200 OK status. Why is that?

A: If the site is built on Bitrix, it likely has a built-in DDoS protection module that blocks frequent requests from the same IP address. As a result, the scanner may receive 403 Forbidden responses after repeated access attempts.

Over time, the server may allow access again, which is why the same page might later return a 200 status. To bypass this limitation, you can use proxies to distribute requests across multiple IP addresses.
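As a rough illustration of what distributing requests across multiple IP addresses means, the sketch below pairs URLs with proxies in round-robin order. The proxy addresses, URLs, and the `assign_proxies` helper are placeholders invented for this example; in SiteAnalyzer itself the proxy list is configured in the program settings.

```python
from itertools import cycle


def assign_proxies(urls, proxies):
    """Pair each URL with a proxy, cycling through the proxy list in order."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    pool = cycle(proxies)
    return [(url, next(pool)) for url in urls]


# Placeholder values (documentation-only IP range and example domain).
PROXIES = ["http://192.0.2.10:8080", "http://192.0.2.11:8080"]
URLS = [f"https://example.com/page/{i}" for i in range(1, 5)]

for url, proxy in assign_proxies(URLS, PROXIES):
    print(f"{url} -> {proxy}")
```

With two proxies, each address sends only every second request, so the target server sees roughly half the request rate per IP.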

How Many Threads Are Optimal for Parsing Large Sites?

Q: I recently parsed a site with over 100,000 links – first with a lower thread count, then again using 40 threads. The second attempt went well. What’s the ideal number of threads for such large-scale parsing? Also, does increasing the number of threads raise the risk of missing broken links?

A: Increasing the number of threads boosts speed, but also increases server load – raising the risk of errors like 500 Internal Server Error. That’s especially true if the server isn’t powerful enough to handle concurrent requests.

As a rule of thumb, start with 10 threads for stable performance. If the server can handle it, you can safely increase to 20 or more. Keep in mind that high thread counts also increase the disk write load. With many threads running, a traditional HDD may become a bottleneck, so an SSD is strongly recommended for large crawls.

The Key Is Already Registered – What to Do?

If the program shows that your license key is already in use, follow these steps to resolve the issue:

1. Go to your Personal Cabinet.

2. Navigate to the Devices section.

3. Remove all registered devices.

4. Return to the program and re-enter your license key.

This will clear any previous associations and allow you to activate the program successfully.

The Program Won't Scan the Site – What Should I Do?

If SiteAnalyzer is unable to scan your site, try changing the User-Agent setting to mimic a search engine bot (such as Googlebot) or a popular browser.

You can find this option in the program settings under the User-Agent section.

Switching the User-Agent can help bypass server restrictions and allow the scan to proceed normally.
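To check outside the program whether a server responds differently depending on the User-Agent, a small script can request the same URL with different header values. This is a minimal sketch using Python's standard library. The helper names `build_request` and `fetch_status` are made up for this example, and the Googlebot string is one commonly published form of that crawler's User-Agent, not necessarily the value SiteAnalyzer sends.

```python
import urllib.error
import urllib.request

# A commonly published Googlebot User-Agent string (assumed for illustration).
GOOGLEBOT_UA = (
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)


def build_request(url, user_agent):
    """Create a request that carries the given User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch_status(url, user_agent, timeout=10):
    """Return the HTTP status code the server sends for this User-Agent."""
    request = build_request(url, user_agent)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as exceptions; report their code.
        return error.code
```

For example, comparing `fetch_status("https://example.com/", "python-urllib")` with `fetch_status("https://example.com/", GOOGLEBOT_UA)` on your own site shows whether the server filters requests by User-Agent.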

How Can I Scan a Site Protected by Cloudflare?

Q: I'm trying to scan my own website, which uses Cloudflare (a common DDoS protection service), but I keep getting a 403 error. How can I bypass this?

A: To allow SiteAnalyzer to scan a site behind Cloudflare, you can create an exception in your Cloudflare settings.

Steps:

1. Go to Cloudflare Dashboard.

2. Navigate to Security > WAF > Create a Firewall Rule.

3. Set up a rule to bypass or disable restrictions for SiteAnalyzer’s requests.

This will prevent Cloudflare from blocking the crawler during your scan.

Always ensure that any changes to your firewall rules are temporary and secure, especially on live sites.

Can't Register the Program? Here's What to Do

If you see the error "Failure when receiving data from the peer" when entering your license key, it usually means SiteAnalyzer is unable to connect to the validation server.

This can happen if your firewall or antivirus software is blocking the connection, or if the program has not been added to the list of trusted applications.

To fix this, temporarily disable your antivirus or firewall, then try entering the key again. If it works, add SiteAnalyzer to the exceptions or trusted apps list. This will allow the program to validate your license without interruption.

How to Limit Site Scanning to 4 Requests Per Second

Q: How can I configure SiteAnalyzer to scan a site at no more than 4 requests per second?

A: To set the scan rate to approximately 4 requests per second, use the following settings:

Number of threads: 1

Requests interval: 250 milliseconds

This ensures one request is sent every 250 ms, resulting in around 4 requests per second.
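The arithmetic behind these settings can be sketched as follows. The sketch assumes that each thread waits the full interval between its own requests and ignores server response time, so the result is an upper bound; the function names are hypothetical.

```python
def max_requests_per_second(threads, interval_ms):
    """Upper bound on the request rate for the given settings."""
    if threads < 1 or interval_ms <= 0:
        raise ValueError("threads must be >= 1 and interval_ms must be > 0")
    return threads * 1000 / interval_ms


def interval_for_rate(target_rps, threads=1):
    """Interval in milliseconds that keeps the rate at or below target_rps."""
    if target_rps <= 0 or threads < 1:
        raise ValueError("target_rps must be > 0 and threads must be >= 1")
    return threads * 1000 / target_rps


print(max_requests_per_second(threads=1, interval_ms=250))  # prints 4.0
print(interval_for_rate(target_rps=4, threads=1))           # prints 250.0
```

Under the same assumption, keeping the 4 requests per second limit with 2 threads would require doubling the interval to 500 ms.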

SiteAnalyzer System Requirements

SiteAnalyzer is designed to run smoothly on a wide range of systems and does not require high-end hardware.

Operating System: Microsoft Windows XP and above.

Supports: Windows 11, 10, 8, 7, Vista, and XP (both 32-bit and 64-bit versions).

You can confidently run SiteAnalyzer even on older machines for reliable website auditing – no powerful hardware required.

Is SiteAnalyzer Compatible with Linux and macOS?

Currently, SiteAnalyzer runs only on Windows, and we do not offer native versions for Linux or macOS.

However, you can still use SiteAnalyzer on these platforms using compatibility tools:

- On Linux: Run SiteAnalyzer using Wine, an open-source compatibility layer that supports many Windows applications. Learn more about Wine.

- On macOS: Use CrossOver, a macOS-compatible tool that allows you to run many Windows applications seamlessly. Learn more about CrossOver.

These solutions provide a practical workaround for using SiteAnalyzer outside the Windows environment.